Abstract

This paper expounds on the importance of identifiability of subjective probabilities in agency theory with moral hazard. An application to insurance is examined.

Introduction

Over the course of the past three decades, advances in the theory of incentive contracts have improved our understanding of the theory of optimal insurance in the presence of moral hazard. In particular, when monitoring of the precautions taken to reduce the risk of loss is impractical, the theory highlights the tension between the desire to attain an efficient allocation of risk bearing and the need to create incentives for the insured to take costly measures to reduce the risk of loss. Second-best optimal insurance contracts balance the gain from the reduction in the overall risk against the efficiency loss in the allocation of risk bearing and the cost of implementing the self-protection measures.

By and large, incentive contracts, including insurance policies, are analyzed as a constrained optimization problem. Specifically, the uninformed party (the principal) is supposed to design a contract that maximizes his objective function; is acceptable to the informed party (the agent); and induces the agent, in pursuit of his self-interest, to take the actions desired by the principal. This analysis is based on the assumption that the principal knows the agent's preferences on the set of contracts and actions. It is this assumption that I revisit in this paper. In particular, I argue that, in a world in which new pertinent information becomes available that requires the adjustment of the terms of the contractual agreement, to attain the second-best solution it is not enough that the principal knows the agent's prior preferences; it is not even enough that he knows that the agent is Bayesian in the sense of updating his prior beliefs using Bayes’ rule. The principal must know the agent's “true” prior probabilities or his posterior preferences contingent on any relevant information that may become available.

Until recently, this aspect of the moral hazard problem had not been dealt with in the literature on agency theory. In this paper, I review and discuss these issues and demonstrate, in the context of a simple insurance problem, the consequences of failing to meet these informational exigencies.

In the half century following the publication of Savage's (1954) The Foundations of Statistics, subjective expected utility theory provided the framework for the analysis of decision making under uncertainty. The essence of Savage's contribution is an axiomatization of choice behavior that mimics the maximization of the sum of the products of utilities of consequences and the subjective probabilities of events in which these consequences obtain. Savage's work, which builds on ideas and results by Ramsey (1927), de Finetti (1937), and von Neumann and Morgenstern (1944), includes a definition of subjective probabilities on events and utilities on consequences that are derived from the underlying preference structure and the additional convention that the utility of consequences is independent of the underlying events.

As I argue in Karni (2006, 2007b), the convention that the utilities of consequences are state-independent is not implied by Savage's axioms and, as a result, is not falsifiable by observed choice behavior. Put differently, while Savage's axioms require that the preference relation display state independence, they do not imply that the utility function is state independent.Footnote 1 In fact, Savage's model admits state-dependent utility functions that are linear transformations of one another.Footnote 2 Consequently, the subjective probabilities in Savage's model do not necessarily measure the decision maker's degree of beliefs about the likelihoods of events. This observation is at the heart of the ensuing discussion.

The second-best solution to insurance in the presence of moral hazard depends, in part, on the insured's beliefs about the likelihood of potential losses, his attitudes toward risk, and his willingness (or reluctance) to spend the resources necessary to reduce the risk of loss. New information affects the assessments of both the insured and the insurer regarding the likelihood of losses. In particular, if both parties are Bayesians, the change in their beliefs in light of new information is, in a sense, predictable. One is inclined, therefore, to suppose that if the insurer knows the insured's prior preferences and that the insured is Bayesian, then the insurer may ascribe to the insured Savage-type subjective probabilities; design an optimal incentive contract based on the prior beliefs; and, whenever new information arrives, update his own and the insured's preferences by the application of Bayes’ rule, and design the new contract based on their posterior preferences. This supposition is false. As a matter of fact, it is possible that if Savage-type subjective probabilities do not represent the insured's beliefs, then the posterior preferences ascribed to him by the insurer are false and the incentive contract the insurer believes should induce the insured to make the necessary effort to reduce the risk of loss is incentive incompatible.

The moral of this is that there are situations – and I think they are many – in which Savage's notion of subjective probabilities is inadequate for the study of incentive contracts. This argument also reveals the immensity of the amount of information needed to address the incentive problem posed by moral hazard.

In Karni (2006, 2007a, 2007b) I developed an alternative analytical framework and axiomatic models that are appropriate for the analysis of the principal–agent relationship in the presence of moral hazard. My approach has the additional advantage of admitting situations analogous to state-dependent preferences. For Bayesian decision makers, this theory also pins down a unique family of action-dependent subjective probabilities that represent correctly the decision maker's beliefs.

In this paper, I show, by way of analyzing a simple insurance problem, the pitfalls of misrepresenting the beliefs of Bayesian decision makers and discuss the consequences for the theory of insurance in the presence of moral hazard. The insurance problem is stated in the next section, followed by a numerical analysis in the subsequent section. A discussion of the new approach to modeling decision making under uncertainty in the presence of moral hazard and event-dependent preferences appears in the penultimate section. The final section includes some concluding remarks.

Insurance with moral hazard

Prior beliefs, preferences, and optimal insurance

Consider a simple insurance problem. A consumer seeks to insure his property against theft. The risk of theft depends on the precautionary measures the consumer takes (e.g., putting on the club when he parks his car, making sure to lock his house). These measures are costly in terms of effort and cannot be monitored by the insurer.

To simplify the discussion, suppose that there are two possible actions corresponding to the two types of behavior: a0 corresponds to negligent behavior (i.e., failure to take the necessary precautions to protect the property against theft) and a1 corresponds to diligent behavior (i.e., always taking the necessary measures to protect the property against theft). Let θ denote the event “the insured property is stolen” and denote by θ′ the complementary event.

Insurance is provided by an expected profit-maximizing Bayesian insurer whose prior beliefs about the likelihood of theft, conditional on the precautions taken by the consumer, are represented by the prior probability πI(θ; a), a∈{a1, a0}.

The insurer (principal)–consumer (agent) relationship is governed by an insurance policy (β, α), where β denotes the net indemnity in the event of a loss due to theft and α denotes the insurance premium. In what follows, I describe the insurance policies that will be offered, taking into account the moral hazard problem and the changes in the terms of these policies if pertinent new information becomes available.

Let the consumer's initial endowment be (w, w′), where w denotes the consumer's wealth in case he sustains a loss due to theft and w′ denotes his wealth otherwise. Thus (w′−w) represents the financial loss in the event θ. Let v be a function on {a0, a1} depicting the disutility (i.e., the utility cost) to the agent associated with the two actions, or modes of behavior, and assume, without essential loss of generality, that v1:=v(a1)>v(a0)=0.

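Suppose that the consumer is a risk-averse, expected utility-maximizing, Bayesian decision maker whose prior preferences, ⪰ a c, a∈{a1, a0}, on insurance policies contingent on his actions are represented by the utility functions

$$U^c((\beta,\alpha),a)=\gamma(\theta)\,u(w+\beta)\,\pi^c(\theta;a)+u(w'-\alpha)\,\pi^c(\theta';a)-v(a), \tag{1}$$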

where u denotes the consumer's von Neumann–Morgenstern utility function; γ(θ) measures the subjective “psychic” cost to the consumer if theft occurs; and πc(·; a) is the subjective probability distribution representing the consumer's prior beliefs regarding the likelihoods of θ and θ′ conditional on taking the action a.

Assume that the insurer knows the consumer's prior preferences and that the consumer is Bayesian. Suppose further that the insurer ascribes to the consumer probabilities and utilities as required by Savage's theory. In other words, the insurer assumes that the consumer's utility function is event independent. Then the insurer's perception of the consumer's objective function is, for a∈{a1, a0},

$$\hat{U}^c((\beta,\alpha),a)=\Gamma(a)\left[u(w+\beta)\,\hat{\pi}^c(\theta;a)+u(w'-\alpha)\,\hat{\pi}^c(\theta';a)\right]-v(a),$$

where π̂c(θ; a):=πc(θ; a)γ(θ)/Γ(a), π̂c(θ′; a):=πc(θ′; a)/Γ(a) and Γ(a):=γ(θ)πc(θ; a)+πc(θ′; a).Footnote 3 In other words, the insurer misrepresents the consumer's beliefs and ascribes to him a cost of action consisting of an additive component, v(·), and a multiplicative component, Γ(·).Footnote 4 Note, however, that even though the insurer ascribes to the consumer incorrect probabilities and his perception of the disutility of action is incorrect, the insurer's representations of the consumer's preferences, ⪰ a c, a∈{a1, a0}, are, nevertheless, correct.
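The claim that the insurer's representation of the prior preferences is nevertheless correct can be checked by direct substitution. The following is a sketch that assumes the true representation has the form γ(θ)u(w+β)πc(θ; a)+u(w′−α)πc(θ′; a)−v(a) and the perceived one the form Γ(a)[·]−v(a), as the definitions above suggest; the label \hat{U}^c for the perceived objective is notation adopted here for illustration.

```latex
\begin{align*}
\hat{U}^c((\beta,\alpha),a)
 &= \Gamma(a)\left[u(w+\beta)\,\hat{\pi}^c(\theta;a)
      + u(w'-\alpha)\,\hat{\pi}^c(\theta';a)\right] - v(a) \\
 &= \Gamma(a)\left[u(w+\beta)\,\frac{\gamma(\theta)\,\pi^c(\theta;a)}{\Gamma(a)}
      + u(w'-\alpha)\,\frac{\pi^c(\theta';a)}{\Gamma(a)}\right] - v(a) \\
 &= \gamma(\theta)\,u(w+\beta)\,\pi^c(\theta;a)
      + u(w'-\alpha)\,\pi^c(\theta';a) - v(a).
\end{align*}
```

The factor Γ(a) cancels for every policy (β, α), so the two expressions rank policies and actions identically, even though π̂c(·; a) differs from πc(·; a) whenever γ(θ)≠1.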

I assume throughout that the consumer always has the option of declining to take out insurance and bearing the risk associated with theft. If the moral hazard problem is not trivial, it must be the case that, without insurance, the consumer will take the action a1.Footnote 5 Thus, the consumer's prior reservation utility level is:

$$\bar{u}^c=\gamma(\theta)\,u(w)\,\pi^c(\theta;a_1)+u(w')\,\pi^c(\theta';a_1)-v_1.$$

Under these assumptions, if the insurer wants to induce the consumer to implement the measures necessary to protect the insured property against theft, his problem may be stated as follows:

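Program 1 Choose a policy (β, α) so as to maximize

$$\alpha\,\pi^I(\theta';a_1)-\beta\,\pi^I(\theta;a_1)$$

subject to the individual rationality constraint (with ûc the reservation utility level the insurer ascribes to the consumer)

$$\Gamma(a_1)\left[u(w+\beta)\,\hat{\pi}^c(\theta;a_1)+u(w'-\alpha)\,\hat{\pi}^c(\theta';a_1)\right]-v_1\ \ge\ \hat{u}^c$$

and the incentive compatibility constraint

$$\Gamma(a_1)\left[u(w+\beta)\,\hat{\pi}^c(\theta;a_1)+u(w'-\alpha)\,\hat{\pi}^c(\theta';a_1)\right]-v_1\ \ge\ \Gamma(a_0)\left[u(w+\beta)\,\hat{\pi}^c(\theta;a_0)+u(w'-\alpha)\,\hat{\pi}^c(\theta';a_0)\right].$$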

Even though he ascribes the wrong beliefs to the consumer, the insurer nevertheless employs a representation of the consumer's prior preferences consistent with the consumer's choice behavior. Hence the optimal insurance policy (β*, α*) is the same whether the insurer solves Program 1 or the same optimization problem with the constraints representing the consumer's true beliefs and utilities as expressed in (1). Thus, insofar as the insurer is concerned, as long as the insurance policy (β*, α*) is in effect, the misspecification of the consumer's utilities and probabilities is of no consequence.

Remark In the literature dealing with principal–agent relationships, it is commonplace to invoke the “common prior” assumption. In the present context, this assumption is open to two interpretations: First, the “true beliefs” of the principal and the agent are in agreement (i.e., πI(·; a)=πc(·; a), a∈{a0, a1}). Second, the principal's perception of the agent's beliefs and his own beliefs agree (i.e., πI(·; a)=π̂c(·; a), a∈{a0, a1}). In the statement of the problem above and in the analysis that follows, the insurer is assigned the role of designing the insurance policies, anticipating the consumer's responses. It is natural, therefore, to analyze the problem as seen by the insurer and, consequently, to adopt the second interpretation of the common prior assumption. I assume, henceforth, that the principal's perception of the agent's beliefs agrees with his own beliefs.

Posterior beliefs, preferences, and optimal insurance

Suppose that at the time the original policy is about to expire, new information, in the form of observation x, becomes available. For instance, the number of car thefts in the neighborhood where the consumer lives has been falling. The insurer must propose a new contract taking into account the new information. What are the terms of the new contract?

To begin with, observe that the new information affects the consumer's reservation utility level. Let πc(·; a, x) denote the subjective posterior distribution from the viewpoint of the consumer, obtained by applying Bayes’ rule to the prior πc(·; a). Denote by π̂c(θ; a, x) the insurer's perceived subjective posterior distribution of the consumer, obtained by applying Bayes’ rule to the prior distribution π̂c(·; a).Footnote 6 Then, the consumer's new reservation utility level is ūc(x)=max{ūc(x, a0), ūc(x, a1)}, where

$$\bar{u}^c(x,a)=\gamma(\theta)\,u(w)\,\pi^c(\theta;a,x)+u(w')\,\pi^c(\theta';a,x)-v(a).$$

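However, the insurer's perception of the consumer's new reservation utility is ûc(x)=max{ûc(x, a0), ûc(x, a1)}, where

$$\hat{u}^c(x,a)=\Gamma(a)\left[u(w)\,\hat{\pi}^c(\theta;a,x)+u(w')\,\hat{\pi}^c(\theta';a,x)\right]-v(a).$$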

Suppose that the insurer still seeks to induce the agent to implement a1. The insurer's problem may be stated as follows:

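Program 2 Choose a policy (β, α) so as to maximize

$$\alpha\,\pi^I(\theta';a_1,x)-\beta\,\pi^I(\theta;a_1,x)$$

subject to the individual rationality constraint

$$\Gamma(a_1)\left[u(w+\beta)\,\hat{\pi}^c(\theta;a_1,x)+u(w'-\alpha)\,\hat{\pi}^c(\theta';a_1,x)\right]-v_1\ \ge\ \hat{u}^c(x)$$

and the incentive compatibility constraint

$$\Gamma(a_1)\left[u(w+\beta)\,\hat{\pi}^c(\theta;a_1,x)+u(w'-\alpha)\,\hat{\pi}^c(\theta';a_1,x)\right]-v_1\ \ge\ \Gamma(a_0)\left[u(w+\beta)\,\hat{\pi}^c(\theta;a_0,x)+u(w'-\alpha)\,\hat{\pi}^c(\theta';a_0,x)\right],$$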

where πI(·; a, x) denotes the insurer's posterior probability distribution obtained by the application of Bayes’ rule.

Let the solution to Program 2 be denoted by (β**, α**).Footnote 7

The main issue

Consider next the situation from the viewpoint of the consumer. He will accept (β**, α**) if, under the corresponding best action, its expected utility exceeds his reservation utility. Formally, the policy (β**, α**) is acceptable to the consumer (that is, it is individually rational) if

$$\gamma(\theta)\,u(w+\beta^{**})\,\pi^c(\theta;\bar{a},x)+u(w'-\alpha^{**})\,\pi^c(\theta';\bar{a},x)-v(\bar{a})\ \ge\ \bar{u}^c(x), \tag{13}$$

where ā∈argmax{a1, a0} γ(θ)u(w+β**)πc(θ; a, x)+u(w′−α**)πc(θ′; a, x)−v(a).

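In view of the desire of the insurer to induce the consumer to implement the action a1, the insurance policy (β**, α**) is incentive compatible if

$$\gamma(\theta)\,u(w+\beta^{**})\,\pi^c(\theta;a_1,x)+u(w'-\alpha^{**})\,\pi^c(\theta';a_1,x)-v_1\ \ge\ \gamma(\theta)\,u(w+\beta^{**})\,\pi^c(\theta;a_0,x)+u(w'-\alpha^{**})\,\pi^c(\theta';a_0,x). \tag{14}$$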

The main question of concern here is the following: Is it necessarily true that if (β**, α**) is individually rational it is also incentive compatible? Formally, is it necessarily true that if (β**, α**) satisfies (13) it must also satisfy (14)?

In the next section I show, by way of a numerical example, that it is possible that the optimal insurance policy (β**, α**) is individually rational but not incentive compatible. In other words, failing to ascribe to the agent the correct prior probabilities and utilities, the principal ascribes to the agent posterior preference relations that are incorrect and, as a result, fails to provide the agent with the incentives that would have induced him to act in the principal's best interest.

An example

Prior beliefs, preferences, and optimal insurance

The consumer's prior beliefs and preferences

Consider a consumer facing a risk of property loss. Specifically, let the consumer's initial endowment be (w, w′)=($2,500; $10,000), so his loss due to accident or theft is $7,500. Let the consumer's von Neumann–Morgenstern utility functions be γ(θ)u(y)=0.5√y in the event θ and u(y)=√y in the event θ′, and let v0=0, v1=5.

Suppose that the consumer's joint probability distributions, quantifying his actual beliefs regarding the likelihoods of the events and observations conditional on his actions, are as follows:

Let the consumer's prior beliefs be represented by the marginal distributions on the events, θ and θ′, conditional on his actions. These are summarized as follows:

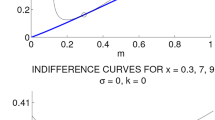

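Hence, the consumer's prior preference relations conditional on his actions, ⪰ a c, a∈{a0, a1}, over the set of insurance policies (β, α) are represented by:

$$U^c((\beta,\alpha),a_0)=0.5\sqrt{2500+\beta}\times 0.2+\sqrt{10000-\alpha}\times 0.8$$

and

$$U^c((\beta,\alpha),a_1)=0.5\sqrt{2500+\beta}\times 0.1+\sqrt{10000-\alpha}\times 0.9-5.$$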

Note that Γ0=0.9, Γ1=0.95,Footnote 8 and the insurer's perception of the consumer's prior beliefs is π̂c(θ, a0)=(0.5 × 0.2)/0.9=0.111, π̂c(θ, a1)=(0.5 × 0.1)/0.95=0.0526. Hence, the insurer's perception of the consumer's prior is consistent with the latter's actual prior preferences. Moreover, the insurer ascribes to the consumer the reservation utility level, ûc=87.5, which is based on the correct perception that, in the absence of insurance, the consumer would take the necessary measures (i.e., the action a1) to protect his property.Footnote 9
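These figures can be reproduced with a few lines of arithmetic. The sketch below uses only values stated in the example and its notes (γ(θ)=0.5, true priors of theft 0.2 and 0.1, utilities 50 and 100 at the uninsured wealth levels, v0=0, v1=5); the function and variable names are chosen here for illustration.

```python
# Values from the example: gamma(theta) = 0.5, true prior probabilities of
# theft 0.2 (a0) and 0.1 (a1), utilities 50 and 100 without insurance.
GAMMA_THETA = 0.5
PRIOR_THEFT = {"a0": 0.2, "a1": 0.1}
COST = {"a0": 0.0, "a1": 5.0}
U_LOSS, U_NO_LOSS = 50.0, 100.0


def scale_factor(action):
    """Gamma(a) = gamma(theta) * pi(theta; a) + pi(theta'; a)."""
    p = PRIOR_THEFT[action]
    return GAMMA_THETA * p + (1.0 - p)


def perceived_prior(action):
    """Savage-type probability of theft that the insurer ascribes."""
    return GAMMA_THETA * PRIOR_THEFT[action] / scale_factor(action)


def true_utility(action):
    """Uninsured expected utility under the consumer's true beliefs."""
    p = PRIOR_THEFT[action]
    return GAMMA_THETA * U_LOSS * p + U_NO_LOSS * (1.0 - p) - COST[action]


def perceived_utility(action):
    """Uninsured expected utility as the insurer represents it."""
    q = perceived_prior(action)
    inner = U_LOSS * q + U_NO_LOSS * (1.0 - q)
    return scale_factor(action) * inner - COST[action]


for a in ("a0", "a1"):
    print(a, round(scale_factor(a), 4), round(perceived_prior(a), 4),
          round(true_utility(a), 4), round(perceived_utility(a), 4))
```

Both representations assign the same uninsured utility to each action, so the reservation level 87.5 does not depend on which probabilities are used.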

The insurer's prior beliefs and preferences

Suppose that the insurer's joint probability distributions, representing his beliefs regarding the likelihoods of the events and observations conditional on the consumer's actions, are:

Hence, the insurer's prior beliefs are represented by πI(θ, a0)=0.111 and πI(θ, a1)=0.0526. Note that πI(·; a)=π̂c(·; a), a∈{a0, a1}. Hence, the prior beliefs of the insurer and those he ascribes to the consumer are in agreement, which is consistent with the aforementioned interpretation of the common prior assumption. These prior beliefs are summarized in the following table:

The optimal prior insurance policy

Acting in his self-interest, the insurer will design a policy intended to induce the consumer to take measures to protect his property. This policy will be both individually rational (i.e., acceptable to the consumer) and incentive compatible.

If the insurer induces the consumer to implement the action a0, the incentive compatibility (henceforth, IC) constraint is nonbinding. Hence the insurer's problem is to design a policy (β, α) that maximizes his expected profit, −0.111β+0.889α, subject to the individual rationality (henceforth, IR) constraint

$$0.9\left[0.111\sqrt{2500+\beta}+0.889\sqrt{10000-\alpha}\right]\ \ge\ 87.5.$$

Because the insurance is fair (no loading), the probability of loss is common to both parties, and the incentive issue does not arise, the optimal policy must provide full insurance. The solution is β=$6,952.1, α=$547.88 implying that the consumer's income is $9,452.1 regardless of the event. However, because he must meet the IR constraint, the insurer must sustain losses. In fact, the insurer's expected income is: −0.111 × 6952.1+0.889 × 547.88=−$284.62.
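The reported full-insurance policy can be checked numerically. This is a sketch under the assumption, inferred from the utility values 50 and 100 at wealth $2,500 and $10,000, that u is the square-root function; Γ0=0.9 and the reservation level 87.5 are taken from the text.

```python
import math

W_LOSS, W_NO_LOSS = 2500.0, 10000.0
BETA, ALPHA = 6952.1, 547.88   # full-insurance policy reported in the text
P_THEFT_A0 = 0.111             # common prior probability of theft under a0
GAMMA_0 = 0.9                  # scale factor the insurer ascribes under a0

income_if_theft = W_LOSS + BETA
income_if_no_theft = W_NO_LOSS - ALPHA

# Assumed utility: u(y) = sqrt(y); with full insurance the perceived
# utility is Gamma_0 * u(income), which should meet the reservation level.
perceived_utility = GAMMA_0 * math.sqrt(income_if_theft)

# Insurer's expected income from the policy.
insurer_income = -P_THEFT_A0 * BETA + (1.0 - P_THEFT_A0) * ALPHA

print(round(income_if_theft, 2), round(income_if_no_theft, 2))
print(round(perceived_utility, 2), round(insurer_income, 2))
```

Income is equalized across the two events up to rounding, the IR constraint holds with (approximate) equality, and the insurer's expected income is negative.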

If the insurer were to induce the consumer to implement the action a1, his problem is to design a policy that maximizes −0.0526β+0.9474α subject to the IR constraint

and the IC constraint

As both constraints are binding, the optimal solution is given by the solution of the system of equations

The solution is β*=$6,429.5, α*=$542.44. The insurer's expected income is: −0.0526 × 6429.5+0.9474 × 542.44=$175.72. Thus, the insurer offers the policy (β*, α*) anticipating, correctly, that the agent will accept the policy and implement the action a1.

Posterior preferences, beliefs, and insurance policy

The consumer's posterior beliefs and preferences given x

Let x be observed. The consumer's likelihood functions are qc(x∣θ, a0)=0.1, qc(x∣θ′, a0)=68/80=0.85, qc(x∣θ, a1)=0.1, and qc(x∣θ′, a1)=69/90=0.76667. Since the consumer is Bayesian, his posterior beliefs are represented by the posterior probabilitiesFootnote 10
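The Bayesian update can be written out explicitly. A minimal sketch, using the consumer's priors (0.2 under a0, 0.1 under a1) and the likelihoods just stated; the function name is illustrative.

```python
def posterior_theft(prior_theft, lik_theft, lik_no_theft):
    """Posterior probability of theft after observing x (Bayes' rule)."""
    joint_theft = lik_theft * prior_theft
    joint_no_theft = lik_no_theft * (1.0 - prior_theft)
    return joint_theft / (joint_theft + joint_no_theft)


# Consumer's posteriors under each action.
post_a0 = posterior_theft(0.2, 0.1, 68 / 80)
post_a1 = posterior_theft(0.1, 0.1, 69 / 90)

print(round(post_a0, 6), round(post_a1, 6))
```

Under these inputs, diligence halves the posterior probability of theft, from roughly 2.86 to 1.43 percent.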

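The consumer's posterior preference relations, ⪰a, xc, on the set of insurance policies are represented by

$$U^c((\beta,\alpha),a_0\mid x)=0.5\sqrt{2500+\beta}\times 0.028571+\sqrt{10000-\alpha}\times 0.971429$$

and

$$U^c((\beta,\alpha),a_1\mid x)=0.5\sqrt{2500+\beta}\times 0.014286+\sqrt{10000-\alpha}\times 0.985714-5.$$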

His reservation utility is ūc(x)=97.857.Footnote 11

The insurer's perception of the consumer's posterior beliefs and preferences given x

The insurer's likelihoods of x are qI(x∣θ, a0)=(0.020)/0.111=0.18018, qI(x∣θ′, a0)=(0.680)/(0.680+0.209)=0.7649, qI(x∣θ, a1)=(0.0050)/(0.0050+0.0476)=0.09506 and qI(x∣θ′, a1)=(0.695)/(0.695+0.2524)=0.7336. Thus, by Bayes’ rule, the insurer's beliefs and, by assumption, the beliefs he ascribes to the consumer are represented by the posterior probabilitiesFootnote 12

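Based on these beliefs, the insurer ascribes to the consumer posterior preference relations on insurance policies and actions represented by

$$\hat{U}^c((\beta,\alpha),a_0\mid x)=0.9\left[0.029\sqrt{2500+\beta}+0.971\sqrt{10000-\alpha}\right]$$

and

$$\hat{U}^c((\beta,\alpha),a_1\mid x)=0.95\left[0.0075\sqrt{2500+\beta}+0.9925\sqrt{10000-\alpha}\right]-5.$$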

The insurer perceives the consumer's reservation utility level to be ûc(x)=89.664.Footnote 13

The posterior insurance policy given x

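If the insurer were to induce the consumer to implement the action a1, his problem, as he perceives it, would be the following: Choose (β, α) so as to maximize −β × 0.0075+α(1−0.0075) subject to the IR constraint

$$0.95\left[0.0075\sqrt{2500+\beta}+0.9925\sqrt{10000-\alpha}\right]-5\ \ge\ 89.664$$

and the IC constraint

$$0.95\left[0.0075\sqrt{2500+\beta}+0.9925\sqrt{10000-\alpha}\right]-5\ \ge\ 0.9\left[0.029\sqrt{2500+\beta}+0.971\sqrt{10000-\alpha}\right].$$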

In this case, the two constraints are binding. The optimal insurance policy is: β**=$7,249.4, α**=$69.28. The insurer's expected profit is: −7249.4 × 0.0075+(1−0.0075) × 69.28=$14.39.
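That both constraints bind at the reported policy, as the insurer perceives them, can be verified numerically. The sketch assumes a square-root utility (inferred from the utility values 50 and 100 at wealth $2,500 and $10,000) and uses the perceived posterior probabilities 0.029 and 0.0075 and the scale factors Γ0=0.9, Γ1=0.95 from the text.

```python
import math

W_LOSS, W_NO_LOSS = 2500.0, 10000.0
BETA, ALPHA = 7249.4, 69.28                  # posterior policy from the text
SCALE = {"a0": 0.9, "a1": 0.95}              # Gamma_0, Gamma_1
PERCEIVED_POST = {"a0": 0.029, "a1": 0.0075} # insurer's posterior of theft
COST = {"a0": 0.0, "a1": 5.0}


def perceived_utility(action, beta=BETA, alpha=ALPHA):
    """Utility the insurer ascribes to the consumer (assumes u = sqrt)."""
    q = PERCEIVED_POST[action]
    u_loss = math.sqrt(W_LOSS + beta)
    u_no_loss = math.sqrt(W_NO_LOSS - alpha)
    return SCALE[action] * (q * u_loss + (1.0 - q) * u_no_loss) - COST[action]


# Insurer's expected profit if the consumer implements a1.
expected_profit = -BETA * 0.0075 + ALPHA * (1.0 - 0.0075)

print(round(perceived_utility("a1"), 3), round(perceived_utility("a0"), 3))
print(round(expected_profit, 2))
```

Both perceived utilities come out at approximately the perceived reservation level 89.664, so from the insurer's viewpoint the policy leaves the consumer just willing to accept and just willing to choose a1.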

If the insurer were to induce the consumer to implement the action a0, then the IC constraint would not be binding and the insurer's problem is to maximize −0.029β+(1−0.029)α subject to the IR constraint:

$$0.9\left[0.029\sqrt{2500+\beta}+0.971\sqrt{10000-\alpha}\right]\ \ge\ 89.664.$$

By the same argument, the optimal insurance policy provides for full insurance. The solution to this problem is: β=$7,425.5, α=$74.461, and the insurer's expected profit is: −0.029 × $7,425.5+(1−0.029) × $74.461=−$143.04. Thus, the insurer is better off with the policy (β**, α**), believing that it induces the consumer to implement the action a1.

The posterior insurance policy is individually rational but incentive incompatible

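Consider next the behavior of the consumer facing the contract β**=$7,249.4, α**=$69.28. The consumer evaluates the contract according to his true posterior preferences. Thus, his posterior expected utility conditional on implementing a1 is given by:Footnote 14

$$U^c((\beta^{**},\alpha^{**}),a_1)=\gamma(\theta)\,u(w+\beta^{**})\,\pi^c(\theta;a_1,x)+u(w'-\alpha^{**})\,\pi^c(\theta';a_1,x)-v_1$$

and his posterior expected utility conditional on implementing a0 is given by:Footnote 15

$$U^c((\beta^{**},\alpha^{**}),a_0)=\gamma(\theta)\,u(w+\beta^{**})\,\pi^c(\theta;a_0,x)+u(w'-\alpha^{**})\,\pi^c(\theta';a_0,x)=98.216.$$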

Thus, the consumer is better off implementing the action a0. Moreover, since 98.216=Uc((β**, α**), a0)>ūc(x)=97.857, the policy (β**, α**) is individually rational.

Hence, the optimal insurance policy perceived by the insurer is individually rational but incentive incompatible. In other words, the consumer will accept the policy (β**, α**) but, contrary to the insurer's expectations, will make no effort to protect the insured property.
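The consumer's side of the comparison can be reproduced in the same way. The sketch again assumes a square-root utility (inferred from the example's utility values, not stated explicitly here) and uses the consumer's true posterior probabilities 0.028571 and 0.014286, γ(θ)=0.5, and v1=5.

```python
import math

W_LOSS, W_NO_LOSS = 2500.0, 10000.0
BETA, ALPHA = 7249.4, 69.28                      # policy offered by the insurer
GAMMA_THETA = 0.5
TRUE_POST = {"a0": 0.028571, "a1": 0.014286}     # consumer's true posteriors
COST = {"a0": 0.0, "a1": 5.0}
RESERVATION = 97.857                             # consumer's reservation level


def true_utility(action):
    """Consumer's posterior expected utility under the policy (u = sqrt)."""
    p = TRUE_POST[action]
    u_loss = GAMMA_THETA * math.sqrt(W_LOSS + BETA)
    u_no_loss = math.sqrt(W_NO_LOSS - ALPHA)
    return u_loss * p + u_no_loss * (1.0 - p) - COST[action]


u_a0 = true_utility("a0")
u_a1 = true_utility("a1")

individually_rational = max(u_a0, u_a1) > RESERVATION
incentive_compatible = u_a1 >= u_a0

print(round(u_a0, 3), round(u_a1, 3))
print(individually_rational, incentive_compatible)
```

Under these assumptions the consumer accepts the policy but prefers a0, which is the individually rational yet incentive-incompatible outcome described above.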

The explanation for this result is that the insurer erroneously ascribes to the consumer action-dependent probabilities that exaggerate the impact of implementing the action a1. In other words, given the observation x, the insurer perceives that the consumer believes that by implementing a1 he reduces the probability of loss from 2.9 to 0.75 percent, whereas, in fact, the consumer believes that by implementing a1 he reduces the probability of loss from approximately 2.86 to 1.43 percent. As a result, the insurer underestimates the exposure to risk necessary to produce the incentives that would induce the consumer to implement a1. The insurer designs an insurance policy that, once accepted, reduces the consumer's exposure to risk to the point at which the cost of taking precautions to avoid the loss covered by the insurance is no longer justified.

A new approach to modeling decision making under uncertainty

The analytical framework and the representation of the decision maker's prior preferences

In Karni (2006, 2007a, 2007b) I developed the theory underpinning the above discussion. Departing from Savage's (1954) analytical framework, this model is composed of the following concepts:

- A finite set, Θ, of effects whose elements are uncertain outcomes. (In the example, these are the events θ and θ′.)

- A set of actions, A, representing the means by which the decision maker believes he might influence the likelihood of the effects. (In the example actions correspond to negligent and diligent behavioral modes.) Generally speaking, in addition to affecting the likelihood of the effects, actions may affect the decision maker's well-being directly. For example, taking the necessary precautions to protect one's property may require effort and other costly resources.

- A bet, b, is a real-valued function on Θ. (In the example, bets have the interpretation of insurance policies.) Let B denote the set of all bets.

The choice set is the product set ℂ:=A×B, whose generic element, (a, b), an action–bet pair, represents conceivable alternatives among which decision makers may have to choose (e.g., taking out insurance and, simultaneously, deciding on the precautionary measures to protect against loss). A decision maker is characterized by a (prior) preference relation, ⪰ on ℂ, that has the usual interpretation and, as usual, the strict preference relation, ≻, and the indifference relation, ∼, are the asymmetric and symmetric parts of ⪰, respectively.

A key idea of this approach is the notion of constant-valuation bets. These are bets that, once accepted, leave the decision maker indifferent among all actions. Under a constant-valuation bet, the direct utility costs associated with costlier actions are precisely compensated for by the improved chances of winning better outcomes these actions afford.Footnote 16 I assume that there exist constant-valuation bets b** and b* such that b**≻b* and, for every (a, b)∈ℂ, there is a constant-valuation bet b̄ satisfying (a, b)∼b̄.

Using this analytical framework, Karni (2006) gives an axiomatization of the following representation of the decision maker's prior preferences:

$$(a,b)\mapsto f_a\!\left(\sum_{\theta\in\Theta}u(b(\theta);\theta)\,\pi(\theta;a)\right), \tag{15}$$

where u is a continuous utility function on the set of consequences, ℝ×Θ; {π(·; a)∣a∈A} is a family of action-dependent probability measures on the set of effects; and {fa:ℝ→ℝ∣a∈A} is a family of continuous strictly increasing functions. Moreover, u is unique; {fa}a∈A are unique up to a common, strictly increasing, transformation; and, for each a∈A, the probability measure π(·; a) is unique.

Several aspects of representation (15) are worth mentioning. First, analogously to states of nature in Savage's framework, the effects in this model are resolutions of the uncertainty surrounding the payoffs of the bets. However, unlike in Savage's theory, the factor resolving the uncertainty may be of direct concern to the decision maker. For instance, if the bet is a health insurance policy, the resolution of the uncertainty is the decision maker's state of health. The representation in (15) accommodates health-dependent risk attitudes or, more generally, effect-dependent risk attitudes. Effect-independent preferences constitute a special case of this representation.Footnote 17

Second, the functions fa that figure in the representation capture the cost of action. A specific functional form is the additively separable representation of the form ∑θ∈Θu(b(θ); θ)π(θ; a)+v(a), often invoked in the analysis of moral hazard problems.

Third, as in Savage's model, the uniqueness of the probabilities in (15) is predicated on an arbitrary normalization of the functions u and {fa}a∈A.Footnote 18 Consequently, there is no guarantee that the probabilities, π(·; a), correspond to the decision maker's beliefs.Footnote 19 Additional information is needed to identify the subjective probabilities that represent the decision maker's beliefs. This information may be obtained from the decision maker's posterior preferences.

Bayesian decision makers

Let X be a set whose elements are observations, representing information pertinent to the decision maker's beliefs regarding the likelihood of the alternative effects conditional on his actions. In the example, such information includes reported thefts and other evidence of property crimes in the neighborhood where the insured property is located. Decision makers are supposed to update their prior preferences if new information becomes available. Let ⪰ x denote the posterior preference relation on ℂ contingent on the observation x∈X.

A Bayesian decision maker is characterized by a prior preference relation, a set of posterior preference relations contingent on the observations, and the condition that the posterior preference relations are obtained from the prior preference relation by updating his subjective probabilities using Bayes’ rule (i.e., leaving intact the other functions that figure in the representation, such as the functions u and {fa}a∈A in the representation (15)). To model the behavior of Bayesian decision makers, it is necessary to formally incorporate new information into the analytical framework.

Consider a decision maker who, before choosing an action–bet pair, receives information that he deems pertinent to his assessment of the likelihoods of alternative effects conditional on his choice of action. In general, for every a∈A, the decision maker is supposed to have action-dependent subjective joint distributions π(·, ·∣a) on Θ × X. Let π(θ∣a), π(x∣a), and the likelihood functions q(x∣θ, a) be determined from π(·, ·∣a) by marginalization and conditioning.Footnote 20

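A decision maker whose prior preference relation ⪰ on ℂ is represented by (15) is said to be Bayesian if his posterior preference relations {⪰ x }x∈X are represented by

$$(a,b)\mapsto f_a\!\left(\sum_{\theta\in\Theta}u(b(\theta);\theta)\,\pi(\theta\mid x,a)\right),$$

where, for all x∈X and a∈A, π(θ∣x, a) is determined by the application of Bayes’ rule, namely,

$$\pi(\theta\mid x,a)=\frac{q(x\mid\theta,a)\,\pi(\theta\mid a)}{\sum_{\tilde{\theta}\in\Theta}q(x\mid\tilde{\theta},a)\,\pi(\tilde{\theta}\mid a)}.$$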

In Karni (2007b) I show that, if the decision maker is Bayesian then the representation of his prior preferences admits a unique set of action-dependent probability measures representing the decision maker's true beliefs.
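The marginalization, conditioning, and updating steps described in this section can be sketched for a single action as follows. The joint distribution below is illustrative: its cells for the observation x follow the consumer's figures under a0 in the example (0.1 × 0.2 and 0.85 × 0.8), while the complementary observation labelled "not_x" and its cells are hypothetical, added only so that the distribution sums to one.

```python
def marginal_effect(joint, effect):
    """pi(theta | a): sum the joint distribution over observations."""
    return sum(p for (th, _), p in joint.items() if th == effect)


def likelihood(joint, obs, effect):
    """q(x | theta, a) = pi(theta, x | a) / pi(theta | a)."""
    return joint[(effect, obs)] / marginal_effect(joint, effect)


def posterior(joint, effect, obs):
    """pi(theta | x, a) obtained by Bayes' rule."""
    effects = {th for th, _ in joint}
    evidence = sum(
        likelihood(joint, obs, th) * marginal_effect(joint, th)
        for th in effects
    )
    return likelihood(joint, obs, effect) * marginal_effect(joint, effect) / evidence


# Joint distribution on effects x observations for one action.
# The "not_x" cells are hypothetical (assumes only two observations).
joint_a0 = {
    ("theft", "x"): 0.02,
    ("theft", "not_x"): 0.18,
    ("no_theft", "x"): 0.68,
    ("no_theft", "not_x"): 0.12,
}

print(round(marginal_effect(joint_a0, "theft"), 4))
print(round(likelihood(joint_a0, "x", "theft"), 4))
print(round(posterior(joint_a0, "theft", "x"), 6))
```

A Bayesian decision maker's posterior preferences then follow by substituting these posterior probabilities into the representation while leaving u and the functions fa unchanged.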

Concluding remarks

This analysis shows that predicting the choice of a Bayesian decision maker when new information becomes available requires knowing his true beliefs, not just his prior preferences. Failure to ascribe to the agent his true prior probabilities and utilities, even if the representation of the agent's prior preferences is correct, may result in attributing to him the wrong posterior preferences. In these kinds of situations, as the example clearly shows, recontracting under new information may be individually rational and yet incentive incompatible.

The results of this analysis have several implications for the study of insurance markets. First, if the empirical distribution of losses contingent on the precautionary measures taken by the insured is known and is believed, by both the insurer and the insured, to be a correct representation of the risk, the problems raised here may be largely avoided. The probabilities ascribed to the agents are the empirical probabilities, and the utility functions may be effect-dependent.

Second, when the evidence does not permit the construction of a reliable empirical distribution, the insurer does not know the probabilities that represent the insured's true beliefs. This means that the set of consumers that purchases a particular policy pools individuals for whom the incentives provided are insufficient to induce them to take the precautionary measures desired by the insurer, thus becoming high-risk types, and individuals for whom the incentives are sufficient, representing low-risk types. The result is that “pooling” contracts implicitly involve cross-subsidization among different risk groups. Moreover, because the insurer lacks the means to control the composition of individuals in the pool, if the insurance market is competitive, there will always be firms that live “on the edge,” because they unknowingly draw a “bad pool.” Insurers can reduce this risk through reinsurance. In general, in these kinds of situations, the incentives written into the insurance policies are excessive for some individuals. These individuals bear too much risk compared with the second-best risk allocation. By the same logic, other individuals, for whom the incentives are insufficient, bear too little risk relative to the second-best risk allocation.

Third, because the fundamental issue here is the hidden characteristics of the consumer – namely, his beliefs – it seems useful to analyze the situation as a special adverse selection problem. Such analysis, however, is beyond the scope of this paper.

Notes

Savage's axioms P3 and P4 capture the “state independence” aspect of the underlying preference relation.

This criticism applies to all known revealed-preference theories of choice under uncertainty that invoke Savage's notion of state-space, including the subjective expected utility model of Anscombe and Aumann (1963), as well as nonexpected utility theories, such as probabilistically sophisticated choice of Machina and Schmeidler (1992, 1995), and the maxmin expected utility model of Gilboa and Schmeidler (1989).

To simplify the notation, henceforth I shall write Γ(a i )=Γ i , i=0, 1.

See Grossman and Hart (1983) for a representation of this type.

If the consumer does not take the precautionary measures when exposed to the full risk, he will certainly not take these measures when covered by insurance and the moral-hazard issue is trivial.

This is where the depiction of the consumer as a Bayesian decision maker and the insurer's awareness of this kick in. Implicitly, there are likelihood functions q(x∣θ, a) that are invoked by the consumer to update the beliefs. These are dealt with explicitly in the example below.

The solution (β**, α**), to insurance Program 2, is given by the solution to the following equations:

and

(see Salanié, 1997).

By definition, Γ0=0.5 × 0.2+0.8 and Γ1=0.5 × 0.1+0.9.

This is the perceived maximum of expected utility if the consumer forgoes taking out insurance and chooses the action a1. Note that, ûc(a1)=0.95(0.0526 × 50+(1−0.0526) × 100)−5=87.5, which is larger than ûc(a0)=0.9(0.111 × 50+(1−0.111) × 100)=85.0.
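The arithmetic in the two preceding notes can be checked with a short script. The sketch below assumes, as the numbers in the notes suggest, two states (loss and no loss) with true prior loss probabilities 0.2 under a0 and 0.1 under a1, a scaling factor γ equal to 0.5 in the loss state and 1 otherwise, payoffs of 50 and 100, and a cost of 5 for a1. All variable and function names are illustrative and do not come from the paper.

```python
# Illustrative check of the notes' arithmetic; names are not from the paper.
gamma = {"loss": 0.5, "no_loss": 1.0}            # assumed scaling factor γ(θ)
payoff = {"loss": 50.0, "no_loss": 100.0}         # payoff in each state
prior = {"a0": {"loss": 0.2, "no_loss": 0.8},     # true priors π(θ; a)
         "a1": {"loss": 0.1, "no_loss": 0.9}}
cost = {"a0": 0.0, "a1": 5.0}                     # disutility of each action

def big_gamma(a):
    """Γ(a) = Σ_θ γ(θ) π(θ; a)."""
    return sum(gamma[s] * prior[a][s] for s in gamma)

def transformed_prior(a):
    """π̂(θ; a) = γ(θ) π(θ; a) / Γ(a)."""
    g = big_gamma(a)
    return {s: gamma[s] * prior[a][s] / g for s in gamma}

def true_value(a):
    """Expected utility under the true priors and state-dependent utility γ(θ)·payoff."""
    return sum(prior[a][s] * gamma[s] * payoff[s] for s in gamma) - cost[a]

def ascribed_value(a):
    """Expected utility under the transformed priors, rescaled by Γ(a)."""
    p_hat = transformed_prior(a)
    return big_gamma(a) * sum(p_hat[s] * payoff[s] for s in gamma) - cost[a]

for a in ("a0", "a1"):
    print(a, round(big_gamma(a), 4), round(transformed_prior(a)["loss"], 4),
          round(true_value(a), 4), round(ascribed_value(a), 4))
```

Under these assumptions the two representations assign the same prior value to each action (85.0 for a0 and 87.5 for a1), even though the loss probabilities they use differ (0.2 versus about 0.111, and 0.1 versus about 0.0526).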

πc(θ∣x, a0)=(0.1 × 0.2)/(0.1 × 0.2+0.85 × 0.8)=0.028571, πc(θ∣x, a1)=(0.1 × 0.1)/(0.1 × 0.1+0.76667 × 0.9)=0.014286.
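The two posterior probabilities in this note follow from Bayes' rule applied to a two-state prior. A minimal sketch, assuming the likelihoods of the signal x are those appearing in the note (0.1 in the loss state; 0.85 and 0.76667 in the no-loss state under a0 and a1, respectively); the function name is hypothetical:

```python
def posterior_loss(prior_loss, lik_loss, lik_no_loss):
    """Posterior probability of the loss state after observing the signal.

    prior_loss   -- prior probability of the loss state
    lik_loss     -- probability of the signal given the loss state
    lik_no_loss  -- probability of the signal given the no-loss state
    """
    joint_loss = lik_loss * prior_loss
    joint_no_loss = lik_no_loss * (1.0 - prior_loss)
    return joint_loss / (joint_loss + joint_no_loss)

post_a0 = posterior_loss(0.2, 0.1, 0.85)
post_a1 = posterior_loss(0.1, 0.1, 0.76667)
print(round(post_a0, 6), round(post_a1, 6))
```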

Note that the consumer's posterior expected utility of (x, a1) is 0.5 × 0.014286 × 50+(1−0.014286) × 100−5=93.929 and that of (x, a0) is 0.5 × 0.028571 × 50+(1−0.028571) × 100=97.857. Hence, given x, the consumer's expected utility is higher under a0 than under a1.

πI(θ∣x, a0)=(0.18018 × 0.111)/(0.18018 × 0.111+0.7649 × (1−0.111))=0.029 and πI(θ∣x, a1)=(0.09506 × 0.0526)/(0.09506 × 0.0526+0.7336 × (1−0.09506))=0.0075.

Note that ûc(x, a0)=0.9 × (0.029 × 50+(1−0.029) × 100)=88.695, which is smaller than ûc(x, a1)=0.95 × (0.0075 × 50+(1−0.0075) × 100)−5=89.644. Hence, ûc(x)=max{ûc(x, a0),ûc(x, a1)}=89.644.
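The gap between the action the insurer predicts and the action the consumer actually prefers after observing x can be illustrated numerically. The sketch below takes the posterior probabilities reported in the notes as given (it does not rederive them) and assumes the same payoffs, cost, and scaling factors used in the example; all names are illustrative.

```python
# Posterior loss probabilities given the signal x, as reported in the notes.
true_post = {"a0": 0.028571, "a1": 0.014286}   # consumer's own posteriors
ascribed_post = {"a0": 0.029, "a1": 0.0075}    # posteriors the insurer ascribes
big_gamma = {"a0": 0.9, "a1": 0.95}            # Γ0 and Γ1 from the notes
cost = {"a0": 0.0, "a1": 5.0}                  # disutility of each action
LOSS, NO_LOSS = 50.0, 100.0                    # payoffs in the two states

def true_value(a):
    """Consumer's posterior expected utility; the loss state is scaled by 0.5."""
    p = true_post[a]
    return 0.5 * p * LOSS + (1.0 - p) * NO_LOSS - cost[a]

def ascribed_value(a):
    """Posterior expected utility the insurer attributes to the consumer."""
    p = ascribed_post[a]
    return big_gamma[a] * (p * LOSS + (1.0 - p) * NO_LOSS) - cost[a]

actual_choice = max(cost, key=true_value)        # action the consumer prefers
predicted_choice = max(cost, key=ascribed_value)  # action the insurer expects
print(actual_choice, predicted_choice)  # prints: a0 a1
```

With these inputs the insurer expects the consumer to choose a1 after observing x, whereas the consumer's own posterior preferences favor a0.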

Formally, let I(a; b)={b′∈B∣(a, b′)∼(a, b)}. A bet b̄ is said to be a constant-valuation bet if (a, b̄)∼(a′, b̄) for all a, a′∈A, and ∩ a ∈ A I(a; b̄)={b̄}.

For details, see Karni (2006).

The normalization consists of assigning b** and b* utilities as follows: u(b*(θ),θ)=0 for all θ∈Θ, and ∑ θ ∈Θu(b**(θ), θ)π(θ; a)=1, a∈A. Then, for all a∈A, f a (1)=1 and f a (0)=0.

To see this, fix a nonconstant function γ: Θ→ℝ₊₊ and let Γ(a)=∑θ∈Θ γ(θ)π(θ; a), û(b(θ); θ)=u(b(θ); θ)/γ(θ), π̂(θ; a)=γ(θ)π(θ; a)/Γ(a), and f̂ₐ=fₐ∘Γ(a). Then, by (15), the prior preference relation ⪰ is represented by

(a, b) ↦ f̂ₐ(∑θ∈Θ û(b(θ); θ)π̂(θ; a)).

But π̂(θ; a)≠π(θ; a) for some a and θ. Therefore, the prior subjective probabilities are not unique.
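The non-uniqueness argument can be checked numerically for an arbitrary positive weighting. The sketch below uses made-up numbers for three states and reads the composition of fₐ with Γ(a) as multiplication of the argument by Γ(a); that reading, the numbers, and the function names are assumptions made for illustration only.

```python
def rescale(pi, gamma):
    """Return (Γ, π̂) for one action, given prior π and positive weights γ."""
    big_gamma = sum(g * p for g, p in zip(gamma, pi))
    pi_hat = [g * p / big_gamma for g, p in zip(gamma, pi)]
    return big_gamma, pi_hat

def inner_value(u, pi):
    """Σ_θ u(θ) π(θ): the argument passed to f_a in the representation."""
    return sum(u_i * p_i for u_i, p_i in zip(u, pi))

pi = [0.5, 0.3, 0.2]        # hypothetical prior over three states
gamma = [2.0, 1.0, 0.5]     # hypothetical nonconstant positive weights
u = [10.0, 4.0, -3.0]       # hypothetical state-dependent utilities of a bet

big_gamma, pi_hat = rescale(pi, gamma)
u_hat = [u_i / g for u_i, g in zip(u, gamma)]

original = inner_value(u, pi)
rescaled = big_gamma * inner_value(u_hat, pi_hat)
print(original, rescaled, pi_hat)
```

Because the two inner values coincide for every bet, any fₐ applied to them ranks bets identically, while π̂ differs from π whenever γ is not constant.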

That is, for every a∈A, π(θ∣a)=∑ x ∈ X π(θ, x∣a), π(x∣a)=∑ θ ∈Θπ(θ, x∣a), and q(x∣θ, a)=π(θ, x∣a)/π(θ∣a).
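These definitions can be illustrated with a small joint table for a single action. The sketch below assumes a binary signal (x or not x) and fills in the joint probabilities implied by the example's numbers under a0 (prior loss probability 0.2, likelihood of x equal to 0.1 in the loss state and 0.85 otherwise); the table layout and function names are hypothetical.

```python
# Joint probabilities π(θ, x | a0) over (state, signal); illustrative values.
joint = {("loss", "x"): 0.02, ("loss", "not_x"): 0.18,
         ("no_loss", "x"): 0.68, ("no_loss", "not_x"): 0.12}

def marginal_state(joint, theta):
    """π(θ | a): sum the joint over signals."""
    return sum(p for (t, _), p in joint.items() if t == theta)

def marginal_signal(joint, x):
    """π(x | a): sum the joint over states."""
    return sum(p for (_, s), p in joint.items() if s == x)

def likelihood(joint, x, theta):
    """q(x | θ, a) = π(θ, x | a) / π(θ | a)."""
    return joint[(theta, x)] / marginal_state(joint, theta)

print(marginal_state(joint, "loss"), marginal_signal(joint, "x"),
      likelihood(joint, "x", "loss"), likelihood(joint, "x", "no_loss"))
```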

References

Anscombe, F. and Aumann, R.J. (1963) ‘A definition of subjective probability’, Annals of Mathematical Statistics 34: 199–205.

de Finetti, B. (1937) ‘La prévision: Ses lois logiques, ses sources subjectives’, Annales de l’Institut Henri Poincaré 7: 1–68. (English translation, by H.E. Kyburg, appears in H.E. Kyburg and H.E. Smokler (eds) (1964) Studies in Subjective Probability, New York: John Wiley and Sons.)

Gilboa, I. and Schmeidler, D. (1989) ‘Maxmin expected utility with non-unique prior’, Journal of Mathematical Economics 18: 141–153.

Grant, S. and Karni, E. (2005) ‘Why does it matter that beliefs and valuations be correctly represented?’, International Economic Review 46: 917–934.

Grossman, S. and Hart, O. (1983) ‘An analysis of the principal-agent problem’, Econometrica 51: 7–45.

Karni, E. (2006) ‘Subjective expected utility theory without states of the world’, Journal of Mathematical Economics 42: 325–342.

Karni, E. (2007a) ‘Agency theory: Choice-based foundations of the parametrized distribution formulation’, Economic Theory, online publication (doi:10.1007/s00199-007-0277-9).

Karni, E. (2007b) ‘Subjective expected utility theory and the representation of beliefs’, unpublished manuscript.

Machina, M.J. and Schmeidler, D. (1992) ‘A more robust definition of subjective probability’, Econometrica 60: 745–780.

Machina, M.J. and Schmeidler, D. (1995) ‘Bayes without Bernoulli: simple conditions for probabilistically sophisticated choice’, Journal of Economic Theory 67: 106–128.

Ramsey, F.P. (1927) ‘Truth and probability’, in R.B. Braithwaite and F. Plumpton (eds) (1931) The Foundations of Mathematics and Other Logical Essays, London: K. Paul, Trench, Trubner and Co.

Salanié, B. (1997) The Economics of Contracts, Cambridge, MA: MIT Press.

Savage, L.J. (1954) The Foundations of Statistics, New York: John Wiley and Sons.

von Neumann, J. and Morgenstern, O. (1944) Theory of Games and Economic Behavior, Princeton, NJ: Princeton University Press.

Additional information

This paper is the 19th Geneva Risk Economics Lecture, delivered to the 34th Seminar of the European Group of Risk and Insurance Economists (EGRIE), 2007, in Cologne, Germany.

Grant and Karni (2005) study a principal–agent problem in which misconstrued probabilities and utilities lead the principal to offer the agent a contract that is acceptable yet incentive incompatible. However, unlike in this paper, Grant and Karni assume that the principal does not know the agent's prior preferences (in particular, the agent's evaluation of the disutility of undertaking the effort-demanding action). Moreover, Grant and Karni did not consider the issue of updating the agent's preferences.

Cite this article

Karni, E. On Optimal Insurance in the Presence of Moral Hazard. Geneva Risk Insur Rev 33, 1–18 (2008). https://doi.org/10.1057/grir.2008.7

DOI: https://doi.org/10.1057/grir.2008.7
