Abstract

Network coding is an emerging telecommunication technique, where any intermediate node is allowed to recombine incoming data if necessary. This technique helps to increase the throughput, however, very likely at the cost of huge amount of computational overhead, due to the packet recombination performed (ie coding operations). Hence, it is of practical importance to reduce coding operations while retaining the benefits that network coding brings to us. In this paper, we propose a novel evolutionary algorithm (EA) to minimize the amount of coding operations involved. Different from the state-of-the-art EAs which all use binary encodings for the problem, our EA is based on path-oriented encoding. In this new encoding scheme, each chromosome is represented by a union of paths originating from the source and terminating at one of the receivers. Employing path-oriented encoding leads to a search space where all solutions are feasible, which fundamentally facilitates more efficient search of EAs. Based on the new encoding, we develop three basic operators, that is, initialization, crossover and mutation. In addition, we design a local search operator to improve the solution quality and hence the performance of our EA. The simulation results demonstrate that our EA significantly outperforms the state-of-the-art algorithms in terms of global exploration and computational time.

Similar content being viewed by others

1. Introduction

Network coding is a new routing paradigm, where each intermediate node is not only able to forward the incoming data but also allowed to mathematically recombine (code) them if necessary (Ahlswede et al, 2000; Li et al, 2003). In essence, by introducing extra computations at intermediate nodes, network coding can efficiently make use of the bandwidth resource of a network and accommodate more information flows than traditional routing (Li et al, 2003). Multicast is a routing scheme for one-to-many data transmission, where the same information is delivered from a source to a set of receivers simultaneously (Miller, 1998). When applied in multicast, network coding can always guarantee a theoretically maximal throughput (Ahlswede et al, 2000; Li et al, 2003). However, performing coding operations will consume extra computational overhead and buffers. Hence, a natural concern is how to route the data from the source to all receivers at the expected data rate while minimizing the number of coding operations necessarily performed.

The above problem is NP-hard (Kim et al, 2006) and a number of evolutionary algorithms (EAs) have been proposed (see Section 2.2), where all of them adopt binary encodings to represent chromosomes (see Section 3.2). However, it is observed in this paper that a major weakness of these encodings is that the search space will include a large proportion of infeasible solutions. These solutions are potential barriers during the search and may significantly deteriorate the performance of EAs. This motivates us to investigate a more suitable encoding approach for EAs to effectively address the problem concerned.

In telecommunications, EAs are widely used as an optimizer to select appropriate routes within limited time. When designing EAs, path-oriented encoding is a direct and natural choice since routing itself is to select paths in a network along which the traffic is delivered. In the literature, path-oriented encoding has been adopted by EAs for solving shortest path routing and multicast routing problems. A number of GAs (Ahn and Ramakrishna, 2002; Cheng and Yang, 2010a, 2010b; Yang et al, 2010) are employed to find the cost-optimal path connecting the given source and receiver. Each chromosome is represented by a path containing a string of IDs of nodes through which the path passes. Also, EAs are used to construct least-cost spanning trees, where each chromosome is represented by a set of paths from the source to receivers (Palmer and Kershenbaum, 1994; Siregar et al, 2005; Oh et al, 2006; Cheng and Yang, 2008, 2010b). Similar to constructing a spanning tree, network coding-based multicast (NCM) finds a subgraph which owns multiple paths. Hence, path-oriented encoding could be a potential choice as the chromosome representation to the network coding resource minimization problem. However, to our knowledge no research in the literature concerns path-oriented encoding for the problem concerned.

In this paper, we propose an EA using path-oriented encoding to address the network coding resource minimization problem. In this EA, a chromosome is comprised of d basic units (BUs), where d is the number of receivers. Each BU consists of a set of paths connecting the source and a certain receiver, and do not share any common link. The number of paths in each BU is the same, that is, the data rate R. We develop three genetic operators, that is, initialization, crossover and mutation based on the proposed path-oriented encoding. In the initialization, an allelic BU pool is generated for each receiver. Then, each chromosome in the population is created by randomly selecting one BU for each receiver. To explore the search space we use a single-point crossover that operates upon BUs without damaging the structure of any BU. In mutation, a max-flow algorithm is carried out on a BU of a chromosome, chosen based on the mutation probability that is associated with the number of receivers, d. In addition to these genetic operators, we also develop a problem-specific local search operator to improve solution quality and avoid prematurity. Experimental results show that the path-oriented encoding EA is capable of finding optimal solutions in most of the test instances within a very short time, and the proposed EA outperforms the existing EAs due to the new encoding and the well-designed associated operators.

2. Problem formulation and related work

2.1. Problem formulation

In this paper, a communication network is modelled as a directed graph G=(V, E), where V and E are the node set and link set, respectively. Assume each link e∈E is with a unit capacity. Only integer flows are allowed in G; hence, a link is either idle or occupied by a flow of unit rate (Kim et al, 2007a, 2007b). An NCM request can be defined as a source s∈V expects to send the same data to a number of receivers T={t 1, …, t d }⊂V at rate R, where R is an integer (Xing and Qu, 2012, 2013). Each receiver t∈T can receive the data sent from the source at rate R (Kim et al, 2007a, 2007b).

Given an NCM request, the task is to find a connected subgraph in G to support the multicast with network coding (Xing and Qu, 2012, 2013). This subgraph is called NCM subgraph (denoted by G NCM). In an NCM subgraph, there are R link-disjoint paths connecting s and each receiver; a coding node is a node that performs coding operations; an outgoing link of a coding node is called a coding link if the data sent out via this link are a combination of the data received by the coding node. In network G, a non-receiver intermediate node with multiple incoming links is referred to as a merging node (Kim et al, 2007a, 2007b). Only merging nodes are possible to become coding nodes. The number of coding links is used to estimate the amount of coding operations performed during the data transmission (Langberg et al, 2006). More descriptions can be found in Xing and Qu (2012). The following lists some notations.

- M G ::

-

the set of merging nodes in G, where m∈M G is an arbitrary merging node in G

- L m ::

-

the set of outgoing links of merging node m, where e∈L m is an arbitrary outgoing link of node m

- σ e ::

-

a binary variable associated with each link e∈L m , ∀m∈M G . σ e =1 if link e is a coding link; σ e =0 otherwise

- Φ(G NCM)::

-

the number of coding links in the NCM subgraph

- R(s, t k )::

-

the data rate between s and t k in the NCM subgraph

- p i (s, t k )::

-

the ith link-disjoint path from s to t k in G NCM, i=1, 2, …, R

The network coding resource minimization problem is defined as to find an NCM subgraph with the minimum number of coding links while satisfying the data rate R, shown as follows:

Minimize:

Subject to:

Objective (1) defines the optimization problem as to minimize the number of coding links; Constraint (2) defines the achievable rate from s and each receiver as exactly R in the NCM subgraph, also indicating that there are R link-disjoint paths between the source and each receiver.

2.2. Related work

By far, a number of EAs have been proposed for solving the minimization problem. These EAs can be classified into four categories, that is, genetic algorithms (GAs), estimation of distribution algorithms (EDAs), EAs with efficiency enhancement techniques, and hybridized EAs.

Kim et al developed several GAs to minimize the involved network coding resource. The first GA was only applicable to acyclic networks (Kim et al, 2006). Then, a distributed GA was designed for both acyclic and cyclic networks, where a graph decomposition method (see Section 3.1) was proposed to map the target problem to an EA framework (Kim et al, 2007a). Besides, two binary encoding approaches, that is, the binary link state (BLS) and the block transmission state (BTS), and their associated operators were evaluated (Kim et al, 2007b) (see Section 3.2).

EDAs have also been used to solve the problem. They maintain one or more probability vectors, rather than a population of explicit solutions. The probability vectors, when sampled, will generate promising solutions with increasingly higher probabilities during the evolution. So far, quantum-inspired evolutionary algorithm (QEAs) and population-based incremental learning algorithm (PBIL) have been developed to optimize the problem concerned (Xing et al, 2010; Ji and Xing, 2011; Xing and Qu, 2011a, 2011b).

In addition, Ahn (2011) and Luong et al (2012) studied the minimum-cost network coding problem using evolutionary approaches, where entropy-based evaluation relaxation techniques were introduced to EAs in order to reduce the computational cost incurred during the evolution. By making use of the inherent randomness feature of the individuals, the proposed EAs can rapidly recognize promising solutions with much fewer individuals to be evaluated.

Xing and Qu (2012) proposed a hybridized EA, where a local search procedure is designed and incorporated. Strong global exploration and local exploitation capabilities can both be obtained during the evolution.

Note that all the EAs above adopt binary encodings to represent chromosomes. However, these encodings have their intrinsic drawback as the search space may contain many infeasible solutions that would significantly increase the difficulty of tackling the problem. It is hence worth designing a more appropriate encoding scheme for EAs to effectively address the problem.

3. The proposed evolutionary algorithm

We first review the graph decomposition method based on which the path-oriented encoding is designed. We then review the existing encodings for network coding resource minimization, that is, the BLS and the BTS. After that, we describe the new encoding, its associated operators and the overall procedure of the proposed EA.

3.1. The graph decomposition method

The graph decomposition method is a means of explicitly showing how information flows pass through merging nodes in network G. This method decomposes each merging node into a number of auxiliary nodes, as described below (Kim et al, 2007a, 2007b).

Suppose merging node i owns In(i) incoming links and Out(i) outgoing links. This node is decomposed into two node sets: In(i) incoming auxiliary nodes and Out(i) outgoing auxiliary nodes. Each incoming link of i is redirected to the corresponding incoming auxiliary node and each outgoing link of i is redirected to the corresponding outgoing auxiliary node. In addition, an auxiliary link is inserted between arbitrary pair of incoming and outgoing auxiliary nodes. Figure 1 shows an example of the graph decomposition. The original graph with source s and receivers t 1 and t 2 is shown in Figure 1(a), where v 1 and v 2 are merging nodes. Figure 1(b) illustrates the decomposed graph, where eight auxiliary links are inserted, showing all possible routes that information flows may pass through v 1 and v 2.

3.2. The BLS and BTS encodings

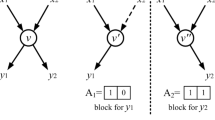

BLS and BTS are the only two existing encoding methods in the literature for the problem concerned (Kim et al, 2007a, 2007b). They are based on the graph decomposition method. For an arbitrary merging node with In incoming links and Out outgoing links, there are In auxiliary links heading to each outgoing auxiliary node after graph decomposition, for example, links u 1→w 1 and u 2→w 1 connect w 1 and links u 1→w 2 and u 2→w 2 connect w 2, as shown in Figure 1(b). Each auxiliary link can be either active or inactive, indicating whether the link allows flow to pass.

Assume there are OAN outgoing auxiliary nodes in the decomposed graph G D, where OAN is an integer. In BLS, a chromosome (solution) X consists of a number of binary arrays b i , i=1, 2, …, OAN, each determining the states of the auxiliary links heading to a certain outgoing auxiliary node in G D. In BTS, the chromosome representation is the same as that in the BLS encoding. However, for each array b i in BTS-based chromosome, once there are at least two 1’s in b i , the remaining 0’s in b i are replaced with 1’s.

Using BLS or BTS encoding has two disadvantages. First, the search space contains a considerable amount of infeasible solutions (see Section 4.2). As aforementioned, how flows pass the merging nodes is determined by the states of all incoming auxiliary links in G D. If many of the incoming auxiliary links are inactive (ie many 0’s in chromosome), it is very likely to lead to an infeasible solution. Infeasible solutions are barriers that disconnect feasible regions in the search space and decrease the search efficiency of EAs. Second, the evaluation procedure is complex and indirect, requiring a number of processing steps, that is, chromosome X→G D→G NCM→f(X). Meanwhile, the computational overhead involved in the step G D→G NCM is quite high due to the calculations of the max-flow between the source and each receiver t k ∈T. The two drawbacks motivate us to devise a more efficient encoding to represent the solutions to the problem concerned.

3.3. The path-oriented encoding and evaluation

In this paper, we adapt the path-oriented encoding within our proposed EA. Each chromosome consists of a set of paths originating from the source and terminating at one of the receivers. Each path is encoded as a string of positive integers representing the IDs of nodes through which the data pass. The set of paths is grouped into d subsets, that is, d BU, where paths in BU connect to the same receiver and they do not share any common link (ie they are link-disjoint). Besides, there are R paths in each BU, where R is the expected data rate. Each chromosome is feasible since each BU of the chromosome satisfies the data rate requirement. Each BU can be easily obtained by max-flow algorithms. For example, we find a NCM subgraph from Figure 1(b), which consists of four paths, as shown below.

The corresponding chromosome is illustrated in Figure 2.

Based on the path-oriented encoding, the chromosome evaluation is simple. For chromosome X, the union of all paths in X forms the corresponding NCM subgraph. The fitness of X, f(X), is known by counting the number of coding links used in the NCM subgraph. So, the computation complexity here is significantly lower than that of BLS and BTS encodings.

Compared with BLS and BTS, path-oriented encoding has two advantages. First, for any instance, the search space consists of feasible solutions only. This leads to a connected search space, and thus helps to reduce the problem difficulty for EAs. Second, the chromosome evaluation is less time-consuming.

3.4. Initialization

It is widely recognized that, for EAs, a good initial population is more likely to lead to a better optimization result. For the proposed algorithm, we initialize the population in the following way. First, we create an allelic BU pool (pool-i) for each receiver t i , where i=1, …, d. Second, we randomly choose one BU from pool-i, i=1, …, d, and combine the selected BUs as a chromosome. The second step is repeated to create a population of a predefined size.

Let pop be the population size and G D be the decomposed graph. Let R denote the expected data rate and hence each BU contains R link-disjoint paths. Let Flow(s, t i ) and Vol(s, t i ) be the max-flow (made of link-disjoint paths) and its volume from s to receiver t i , respectively. The max-flow algorithm (Goldberg, 1985) is used to calculate Flow(s, t i ) and Vol(s, t i ). Figure 3 shows the initialization procedure of our EA based on the path-oriented encoding.

For a specific graph G temp, only one BU can be obtained by the max-flow algorithm. To obtain an allelic BU pool for receiver t i , we have to change the structure of G temp by deleting different links from G D at each time. As aforementioned, how the information flows pass through a given network depends on the states of all auxiliary links in the decomposed network. So, only the auxiliary links are considered for deletion in our EA. To generate a new BU for receiver t i , we randomly pick up a BU from pool-i and randomly select an auxiliary link owned by the BU, as shown in steps 9 and 10. The selected link is then removed from G temp to make sure the new G temp is a different graph.

3.5. Crossover

In the proposed EA, we use single-point crossover to each pair of selected chromosomes with a crossover probability p c. As aforementioned, there are d BUs in a chromosome. The crossover point is randomly chosen from the (d−1) positions between two consecutive BUs. Two offspring are created by swapping the BUs of the two parents after the crossover point. An example crossover operation is illustrated in Figure 4, where each parent consists of four BUs and the crossover point is between the second and third BUs.

First, the proposed crossover does not destroy any BU. So, after crossover, the offspring are all feasible to warrantee a connected search space. No repair is required, which is usually needed in EAs based on the BLS and BTS encodings. Second, the genetic information of the parents could be mixed and spread over offspring chromosomes so that new regions in search space are explored.

3.6. Mutation

Mutation is to help the local exploitation and avoid the prematurity of EAs. As mentioned in Section 3.3, each BU is a set of R link-disjoint paths from the source to a particular receiver. Mutation upon a BU leads to another set of R link-disjoint paths. The idea behind the mutation is that some auxiliary links owned by the chosen BU are deleted from the secondary graph G D. Then, the new BU is generated by implementing the max-flow algorithm on the new G D. We propose two mutation operators, the ordinary mutation M 1 and greedy mutation M 2, where each BU of a chromosome is to be mutated with a mutation probability p m. The difference between M 1 and M 2 is on which links in the chosen BU are deleted. In this paper, we only concern the removal of auxiliary links since they determine the amount of coding resources required.

In M 1, for a chosen BU, we randomly select an auxiliary link in the BU and delete the link from the decomposed graph G D. After that, we compute the max-flow, that is, Flow(s, t i ), by using the max-flow algorithm on G D (Goldberg, 1985). If the volume of Flow(s, t i ), Vol(s, t i ), is not smaller than the expected data rate R, a new BU is obtained by randomly selecting R paths in Flow(s, t i ). The new BU then replaces the old BU. If Vol(s, t i ) is smaller than R, the data rate requirement cannot be met and the old BU remains. The procedure of M 1 is shown in Figure 5, where rnd() generates a random value uniformly distributed in the range [0,1]. Figure 6 shows an example of BU mutation using M 1, where the example network G and its decomposed network G D are illustrated in Figure 1. Note that links u 2→w 1 and u 3→w 3 are the only auxiliary links in the chosen BU. In the example, link u 3→w 3 is removed from G D and a new BU is found based on the new G D.

In M 1, a random auxiliary link is deleted from G D to compute a new BU. The new BU, combined with the remaining (d−1) BUs of the chromosome, may lead to an increased number of coding links. This is because no domain knowledge is taken into consideration in M 1. To avoid this we propose the greedy mutation M 2 which is the same as M 1 except the way of which auxiliary links are chosen to be deleted.

In M 2, when deleting auxiliary links from G D, we concern not only the chosen BU but also the remaining (d−1) BUs. A random auxiliary link owned by the chosen BU is deleted from G D to make sure that a new different BU is introduced. We also delete in G D those unoccupied auxiliary links which connect to one of the outgoing auxiliary nodes being occupied by the remaining (d−1) BUs, to make sure that no additional coding links are introduced after M 2. One advantage of M 2 is that the fitness value of a chromosome tends to be smaller after mutation. However, M 2 may lead the search to local optima.

Regarding the mutation probability p m, a fixed value may not be a wise choice since the number of BUs in a chromosome changes according to d, that is, the number of receivers. A fixed p m value, for example 0.1, could lead to a dramatically different number of mutation operations during the evolution, which may not be generally applicable for different multicast sessions. In our EA, we set p m=1/d; hence more likely to lead to a stable optimization performance of EA.

3.7. The local search operator

To enhance local exploitation, we propose a local search (LS) operator that is performed on a randomly selected chromosome at each generation.

The aim of this operator is to revise some BUs of the selected chromosome to gradually reduce the number of coding links involved in the multicast. Note that each outgoing link of a merging node is redirected to an outgoing auxiliary node after the graph decomposition, as discussed in Section 3.1. So in the NCM subgraph of an arbitrary chromosome, each coding link corresponds to a certain coding node (ie an outgoing auxiliary node that performs coding). To reduce the number of coding nodes is to decrease the number of coding links. Assume there is a chromosome X of which the NCM subgraph contains K coding nodes, where K is an integer. The LS operator aims to remove the occurrence of coding operation at each coding node. The K coding nodes will be processed one by one, in an ascending order according to their node IDs.

We assume the kth coding node (denoted by cnode-k, k=1, 2, …, K) is to be processed by the LS operator. We also assume that there are C (C⩾2) auxiliary links connecting to cnode-k in the NCM subgraph of X, meaning information via these links is involved in the coding at cnode-k. To remove the coding from cnode-k, one simple idea is to delete arbitrarily (C−1) auxiliary links from the NCM subgraph of X. However, directly removing these links leads to an infeasible X since BUs which occupy these (C−1) links are damaged. To overcome this, our LS operator reconstructs the affected BUs so that they bypass the use of the (C−1) auxiliary links mentioned above, explained as follows.

First of all, we randomly select (C−1) auxiliary links connecting to cnode-k and check which BUs are occupying these links. The affected BUs will be reconstructed, while the others remain in the NCM subgraph. Next, we delete the selected (C−1) auxiliary links from the decomposed graph G D. Besides, we also delete those currently unoccupied auxiliary links from G D which connect to one of the outgoing auxiliary nodes being occupied by the unaffected BUs. The reason to remove the unoccupied auxiliary links is that we expect to reduce the chance of removing one coding node at the expense of introducing other coding node(s). Finally, we reconstruct the affected BUs by using the max-flow algorithm over G D. If all the affected BUs are successfully constructed, we obtain a new chromosome X new. If X new owns less coding links than X, we replace the incumbent X with X new (ie the LS moves to an improved solution X new) and repeat the LS operator to improve the new incumbent X new. Otherwise, we retain X and proceed to the next coding node of X. The LS operator stops when either no improvement is made to the incumbent chromosome after checking all its coding nodes, or a new chromosome with no coding involved (ie optimal) is found.

An example LS is shown in Figure 7, where Figure 1(a) is the example network. The example NCM subgraph G NCM consists of two BUs, that is, BU1={s→a→t 1, s→b→u 2→w 2→d→u 4→w 3→t 1} and BU2={s→b→t 2, s→a→u 1→w 2→d→u 4→w 4→t 2}, as seen in Figure 7(a). Obviously, node w 2 is the only coding node in G NCM. According to the rule of LS, one of the incoming auxiliary links, that is u 1→w 2 and u 2→w 2, needs to be removed from G D. In the example, link u 1→w 2 is chosen for removal and hence the affected BU, that is BU2, has to be reconstructed. Besides, as auxiliary nodes w 2 and w 3 are currently occupied by BU1, all unoccupied auxiliary links heading to w 2 and w 3 also need to be deleted from G D. So, link u 3→w 3 is deleted. Based on the new G D, a new BU2={s→b→t 2, s→a→u 1→w 1→c→u 3→w 4→t 2} is rebuilt, as shown in Figure 7(c). It is easily seen that the combination of BU1 and BU2 results into an NCM subgraph without coding operation. Hence, the LS procedure stops and returns the resulting NCM subgraph.

The LS operator is useful to improve the solution-quality (ie better fitness) of the selected chromosome. Also, it changes the structure of the chromosome. Hence, the new chromosome may also help to increase the population diversity. The evaluation of the LS operator is discussed in Section 4.7.

3.8. The overall procedure of the proposed EA

The procedure of the proposed EA is shown in Figure 8. The evaluation of chromosome X i (t) (in step 4) is simple. In G D, we mark those nodes and links being occupied by the BUs in X i (t). The union of the marked nodes and links forms the NCM subgraph G NCM of X i (t). The number of coding links in G NCM, that is Φ(G NCM), is assigned to X i (t) as its fitness. In step 8, tournament selection (Mitchell, 1996) is adopted in our proposed EA. The tournament size is set to 2, which is a typical setting for EAs. In step 9, the elitism scheme is used to preserve the best-so-far chromosome. In step 11, either the ordinary mutation or the greedy mutation can be used here. The termination conditions are that, either the EA has found a chromosome of which the NCM subgraph has no coding link, or EA has evolved a predefined number of generations.

4. Performance evaluation

In this section, we first introduce the test instances used to evaluate the performance of the proposed EA (we hereafter call it pEA). We then investigate the deficiency of BLS and BTS encodings. After that we study the effectiveness of the crossover and mutation of pEA, and compare EAs with path-oriented, BLS and BTS encodings. The LS operator is studied next. Finally, we compare pEA with the existing EAs in terms of optimization performance and computational time.

4.1. Test instances

We consider 14 test instances, four on fixed networks and 10 on randomly generated networks. The four fixed networks are 3-copy, 7-copy, 15-copy and 31-copy networks which have been used to test the performance of EAs for a number of network coding-based optimization problems (Kim et al, 2007b; Xing and Qu, 2011a, 2012, 2013). Figure 9 illustrates an example of n-copy network, where Figure 9(b) is a 3-copy network constructed by cascading three copies of the original network in Figure 9(a). In an n-copy network, the source is the node on the top and the receivers are at the bottom. The n-copy network has n+1 receivers to which data rate from the source is 2. We hereafter call 3-copy, 7-copy, 15-copy and 31-copy networks as Fix-1, Fix-2, Fix-3 and Fix-4 networks, respectively. The 10 random networks (Rnd-i, i=1, …, 10) are directed networks with 20-60 nodes. Table 1 shows the 14 instances and their parameters. To encourage scientific comparisons, all instances are provided at http://www.cs.nott.ac.uk/~rxq/benchmarks.htm. The predefined number of generations for all algorithms tested is set to 200. All experiments were run on a Windows XP computer with Intel(R) Core(TM)2 Duo CPU E8400 3.0 GHz, 2 GB RAM. The results are achieved by running each algorithm 50 times.

4.2. Deficiency of BLS and BTS encodings

Different encoding approaches could greatly affect the performance of EAs (Mitchell, 1996). The resulting search spaces may be significantly different with respect to not only the size but also the structure and connectivity of the underlying landscape. As discussed in Section 3.2, in theory, the search space of BLS or BTS encoding may contain many infeasible solutions. The solutions are thus scattered in disconnected feasible regions in the search space. The connectivity among feasible solutions may be so weak that to find optimal solution(s) by EAs becomes extremely difficult.

In this section, we statistically measure the proportion of infeasible solutions (PIS) over search space by randomly sampling. Table 2 shows the results of PIS over 10 000 samples for each instance. For all instances, the PIS values are more than 99%. In particular, in Fix-2,3,4 and Rand-5,7,8,9,10, the PISs of BLS and BTS are always 100%, meaning that all samples are infeasible solutions that constitute the majority of the search space. Large amount of infeasible solutions could disconnect feasible regions in the search space and dramatically increase the problem difficulty for search algorithms. Hence, the BLS and BTS encodings are not appropriate encoding schemes for our target problem.

4.3. Performance measures

To show the performance of pEA in various aspects, such as the optimal solution obtained, the convergence characteristic, and the consumed running time, the following performance metrics are used throughout Section 4.

-

Mean and standard deviation (SD) of the best solutions found over 50 runs. One best solution is obtained in one run. The mean and SD are important metrics to show the overall performance of a search algorithm.

-

Student’s t-test (Yang and Yao, 2005; Walpole et al, 2007) to compare two algorithms (eg A1 and A2) in terms of the fitness values of the 50 best solutions obtained. In this paper, two-tailed t-test with 98 degrees of freedom at a 0.05 level of significance is used. The t-test result can show statistically if the performance of A1 is better than, worse than, or equivalent to that of A2.

-

Successful ratio (SR) of finding an optimal solution in 50 runs. The successful ratio, to some extent, reflects the global exploration ability of an EA to find optimal solutions.

-

Evolution of the best fitness averaged over 50 runs. The plot of the evolution illustrates the convergence process of an algorithm.

-

Average computational time (ACT) consumed by an algorithm over 50 runs. This metric is a direct indication of the time complexity of an algorithm.

4.4. The effectiveness of crossover in pEA

As mentioned in Section 3.5, the single-point crossover is used in pEA. We investigate the feasibility of this operator and the impact of different settings of the crossover probability p c on the performance of pEA. Mutation and LS operator is excluded in pEA in this experiment. We set the population size pop=20 and compare the performance of pEA with four different p c, that is 0.0, 0.3, 0.6 and 0.9, where p c=0.0 means the algorithm stops after initialization since no crossover is involved. By comparing the results of different p c and those of p c=0.0, one could see the effectiveness of the crossover.

The results of the mean and standard deviation of pEA with different p c are shown in Table 3. It can be seen that pEA with crossover performs better than pEA without crossover in each instance, indicating crossover can properly drive the evolution process. Besides, we find with larger p c the mean and SD become increasingly better. The variant of pEA with p c=0.9 performs the best, showing that rapid exchange of genetic information over different chromosomes helps to explore different areas in the search space. However, we may also find that there remain big gaps between the best solutions obtained by pEA with only crossover and the optimal solutions in each instance. This is mainly because employing crossover only is not enough to guide pEA to escape from local optima. We need mutation to enhance local exploitation and avoid prematurity.

4.5. The effectiveness of mutation in pEA

We propose two mutation operators with p m=1/d in Section 3.6, that is, the ordinary mutation M 1 and greedy mutation M 2, where d is the number of receivers. To mutate a BU, M 1 deletes a random auxiliary link of the BU from G D while M 2 deletes a random auxiliary link of the BU and a number of unoccupied auxiliary links from G D. The removal of the random link is to make sure that the mutated BU is different from the old one. Besides, the removal of those unoccupied links is to ensure no extra coding link will be introduced after mutation.

In the following experiment, we compare the performance of pEA with the proposed crossover and different mutations. The comparison between M 1 and M 2 can show whether the removal of those unoccupied auxiliary links helps to improve the performance of pEA. When comparing M 1 and M 2, we also study the impact of different p m, that is 2/d, 1/d and 0.5/d. Let M 1(p m) and M 2(p m) denote the two mutations with p m, respectively. In the experiment, LS operator is excluded. We set pop=20 and p c=0.9.

Table 4 shows the results of mean and standard deviation of the obtained best fitness values by pEA with different mutations and different p m. Between the two mutations, we find that pEA with M 2 performs better than pEA with M 1 if taking into account the results for all instances. The worst p m for M 2 is 0.5/d while the best p m for M 1 is 1/d. If comparing the results of M 2(0.5/d) and those of M 1(1/d), we see that M 2(0.5/d) wins in nine instances while M 1(1/d) wins in two instances, indicating M 2 is more effective than M 1. In addition, having a look at M 2 with different p m, we also find that the mean and SD become better and better with p m changing from 0.5/d to 2/d. This is because when mutating a BU, M 2 makes sure that the rebuilt BU does not increase the amount of coding operations to the corresponding chromosome. On the contrary, it is possible that coding at one or more nodes of a chromosome is eliminated after M 2. Hence, imposing reasonably more M 2 operations to the evolving population is more likely to obtain a better optimization performance of pEA. We hereafter only use the greedy mutation as the mutation operator in our pEA.

To further support our findings, we compare different mutations with different p m by using Student’s t-test (see Section 4.3), where results are given in Table 5. The result of comparison between A1↔A2 is shown as ‘+’, ‘−’, or ‘∼’ when A1 is significantly better than, significantly worse than, or statistically equivalent to A2, respectively. The table shows that M 2 is significantly better than M 1 in nine instances and statistically equivalent to M 1 in the remaining instances, which undoubtedly reflects the superiority of M 2 over M 1. Moreover, M 2 with a larger p m performs better than M 2 with a smaller p m. However, their performances do not differ too much. For example, between M 2(2/d) and M 2(1/d), the former only wins two instances.

The results of the successful ratio and average computational time are collected in Table 6. For the successful ratio, the results match our findings from Table 4, where M 2 is better than M 1 and a larger p m results into a better performance of M 2. For the average computational time, we find that the computational complexity of mutation is higher than that of evaluation.

In general, fitness evaluation is assumed to be the most time-consuming operation compared with other operations such as selection, crossover and mutation for highly complex optimization problems. However, the above assumption is no longer held in pEA (without the LS operator) where mutation takes a comparable larger computation time over the fitness evaluation. In mutations (ie M 1 and M 2), computation is spent on two steps, that is, the removal of some auxiliary links from the decomposed graph G D and the reconstruction of a new BU. The max-flow algorithm in Goldberg (1985) is used, leading to a time complexity of O(|V D|2·|E D|1/2), where |V D| and |E D| are the number of nodes and links in G D, respectively. Compared with the reconstruction of the BU, the removal of auxiliary links consumes very limited computation and can be ignored. Hence, to mutate a chromosome (no matter M 1 or M 2), we require a complexity of O M, where O M=O(p m·d·|V D|2·|E D|1/2). In contrast, to evaluate a chromosome, we only need to obtain the NCM subgraph G NCM of this chromosome and check how many outgoing auxiliary nodes perform coding in G NCM. As mentioned in Section 3.3, each G NCM consists of d BUs, each of which contains R paths, for example p i (s, t k ) is the ith path of the kth BU. Let L ik be the string length of p i (s, t k ) in the chromosome. To obtain a G NCM from the corresponding chromosome, the amount of computation involved is ∑ i ∑ k L ik , where L ik <|V D|. We assume there are Y outgoing auxiliary nodes in G D where Y<|V D| since at least the source and receivers are not decomposed. To check the status of all outgoing auxiliary nodes in G NCM, the amount of computation involved is Y. Therefore, to evaluate a chromosome requires a complexity of O E<O(|V D|2)<O M.

According to the above finding, the computational time in pEA is mainly spent on the mutation operations during the evolution. Hence, the computational time of pEA should be proportional to the amount of mutation operations. Let us take some examples to show the linear relationship between them. Note that pEA stops at two conditions, either a chromosome without coding is found or a predefined number of generations is reached. To show if the computational time changes proportionally to the amount of mutation operations during the evaluation, we should look at those instances where the successful ratios for different mutation rates are all 0%. In these instances the amount of mutation operations for different p m is proportional and we only need to check if the computational time is also proportional. Taking instance Fix-3 as an example, theoretically, the ratio of the amount of mutations during the evolution for M 2(2/d), M 2(1/d) and M 2(0.5/d) is 4:2:1. In practice, the ratio of the average computational time of M 2(2/d), M 2(1/d) and M 2(0.5/d) are calculated as 3.58:1.71:1.00 which is similar to the theoretical ratio.

4.6. Comparisons of different encoding approaches

In this section, we show the superiority of the path-oriented encoding over other existing encoding approaches by comparing the performance of three EAs, that is pEA, GA with BLS encoding (BLSGA) and GA with BTS encoding (BTSGA). For the BLS and BTS encoding approaches, please see Kim et al (2007b) and Section 3.2 for details. Note that an all-one chromosome is inserted into the initial population of BLSGA and BTSGA to make sure they begin with at least one feasible solution; otherwise, the two GAs may never converge since no feasible solution may be obtained during the search (Kim et al, 2007b). This has showed to be an effective method in previous work (Kim et al, 2007a, 2007b; Xing and Qu, 2011a, 2011b, 2012, 2013).

The comparison is based on a standard GA framework, where genetic operators in each EA include selection, crossover and mutation. The population size and the tournament size are set to 20 and 2 for each algorithm, respectively. In pEA, we use the greedy mutation and set p c=0.9 and p m=1/d. We adopt the best parameter settings for BLSGA and BTSGA in Kim et al (2007b). In BLSGA, p c=0.8 and p m=0.006. In BTSGA, p c=0.8 and p m=0.012. Besides, BLSGA and BTSGA use the uniform crossover with a mixing ratio of 0.5 and a simple mutation where each bit of a chromosome is flipped at p m.

The performance comparisons of EAs with different encodings are shown in Table 7. Besides, the t-test results are provided in Table 8. Undoubtedly, pEA achieves better optimization results and consumes less ACT than BLSGA and BTSGA in almost all instances.

To show the convergence of the three EAs, we plot the evolution of the best fitness in each generation, averaged over 50 runs for two fixed and four random instances, as shown in Figure 10. First, we can see that pEA always obtains better initial solutions than BLSGA and BTSGA. For example, in Figure 10(a), at the beginning of the evolution, the average best fitness for pEA is around 7 while those of BLSGA and BTSGA are both 11. Moreover, we find that pEA converges very fast especially in the early generations. To find a good solution, pEA needs much less generations than BLSGA and BTSGA. This is an outstanding advantage of pEA especially in real-time and dynamic applications, where a decent solution must be found within a very short time.

Based on the analysis above, we conclude that the path-oriented encoding is more efficient than the BLS and BTS encodings in terms of global optimization, convergence and computational time.

4.7. The effectiveness of the LS operator

As discussed in Section 3.7, a LS operator is applied to a randomly chosen chromosome at each generation to improve the solution quality. To verify the effectiveness of this operator, we randomly construct five chromosomes for each instance by using the initialization method in Section 3.4. We apply the LS operator on each chromosome and compare the fitness values of the chromosome before and after implementing the LS operator, that is ΦBEF and ΦAFT. Let X and X′ denote the chromosome before and after the LS, and E A(X) and E A(X′) be the set of auxiliary links owned by X and X′, respectively. We define the structural difference coefficient (SDC) ρ between X and X′ according to the Marczewski-Steinhaus concept of distance (Marczewski and Steinhaus, 1958), as follows:

The value of SDC is between 0.0 and 1.0, which tells us to what degree X and X′ are different, showing the effect of LS operator on the structure change of solutions. A larger SDC indicates a severer structural change caused by the LS operator.

The experimental results of ΦBEF, ΦAFT and ρ are shown in Table 9. First, it is seen that ΦEND is smaller than ΦSTART especially for instances Fix-3,4, showing that the LS operator can improve the quality of chromosomes. Meanwhile, regarding the values of ρ in all instances, 32 chromosomes (45% of the 70 chromosomes) are at least 30% different on the structure, meaning the LS operator may also help to introduce extra diversity to the population.

4.8. Overall performance evaluation

This section evaluates the overall performance of pEA by comparing it with six state-of-the-art algorithms in the literature. The following explains the algorithms for comparison.

-

GA1: BLS encoding-based GA (Kim et al, 2007b). Different from BLSGA used in Section 4.6, GA1 employs a greedy sweep operator after the evolution to further improve the quality of the best solution found by flipping each of the remaining 1’s to 0 if it does not result into an infeasible solution.

-

GA2: BTS encoding-based GA (Kim et al, 2007b). The same greedy sweep operator is applied at the end of evolution as in GA1.

-

QEA1: Quantum-inspired evolutionary algorithm (QEA) (Xing et al, 2010). QEA maintains a population of quantum-bit chromosomes. Each chromosome is a probabilistic distribution model over the solution space. Each sampling on a chromosome results into a solution. Rotation angle step (RAS) and quantum mutation probability (QMP) are used to update each chromosome. QEA1 is based on the BLS encoding. For each chromosome, the RAS value is randomly generated and the QMP value is set according to the current fitness of the chromosome.

-

QEA2: Another QEA proposed by Ji and Xing (2011). The main difference between QEA2 and QEA1 is that in QEA2 the RAS and QMP values of a chromosome are adjusted according to the current and previous fitness values of the chromosome.

-

PBIL: Population-based incremental learning algorithm (Xing and Qu, 2011a). BLS encoding is used. PBIL maintains a real-valued probability vector (PV) which, when sampled, produces promising solutions with higher probabilities. At each generation, the statistic information of high-quality samples is used to update the PV. A restart scheme is introduced to help PBIL to escape from local optima.

-

cGA: Compact genetic algorithm (Xing and Qu, 2012). Similar to PBIL, cGA also maintains a PV. However, the PV in cGA is only sampled once at each generation. The new sample is compared with the best-so-far sample and between the two the winner is used to update the PV. Based on BLS encoding, cGA is featured by a restart scheme and a local search operator.

-

pEA1: the path-oriented encoding EA. Note that LS operator is excluded. The performance of pEA1 will demonstrate the pure evolutionary search ability of the proposed algorithm.

-

pEA2: pEA1 with LS operator, which indicates the overall performance of the proposed algorithm.

The population size is set to 20 for each algorithm. For GA1, we set p c=0.8 and p m=0.006. For GA2, we have p c=0.8 and p m=0.012. For QEA1, QEA2, PBIL and cGA, we adopt their best parameter settings (Xing et al, 2010; Ji and Xing, 2011; Xing and Qu, 2011a, 2012). For pEA\LS and pEA, we set p c=0.9 and p m=1/d, where d is the number of receivers.

The comparison results are collected in Table 10, where the best results in mean are in bold. First, we analyse the data in Mean and SR for each algorithm. It can be seen that pEA2 always performs the best in each instance while cGA is the second best. The third best algorithm is PBIL. Compared with QEA1 and QEA2, PBIL performs better in six instances (see Fix-2,3 and Rnd-5,7,9,10) and worse in two instances (see Fix-4 and Rnd-8). The comparison of pEA1 and pEA2 illustrates that the LS operator helps to improve the overall performance of the proposed algorithm. In some cases the improvement is substantial, for example the mean and SR in instances Fix-2,3,4. When comparing pEA1 with the existing algorithms, we can see that in fix networks, pEA1 has similar performance with GA1. In random networks, pEA1 gains similar performance with PBIL except for instances Rnd-8,9 and illustrates better performance than GAs and QEAs in most instances.

Next, we compare the ACT of the algorithms. Before analysing the data, we divide the 14 instances into two groups according to their PIS values (see Section 4.2). Those with a PIS value less than 100% belong to the first group (called easy instances) while the rest belong to the second group (called hard instances). Easy instances includes Fix-1 and Rnd-1,2,3,4,6 while hard instances are Fix-2,3,4 and Rnd-5,7,8,9,10. Regarding easy instances, one can find that more than half of the state-of-the-art algorithms (GA1, GA2, QEA1, QEA2, PBIL, and cGA) can find an optimal solution with a successful ratio of 100%. As for hard instances, most of the state-of-the-art algorithms have a lower successful ratio than 100%. In easy instances, most of algorithms can obtain an optimal solution within a short time (eg less than 1 s). However, in each hard instance, the ACT spent by each algorithm differs significantly. In easy instances, QEA1, QEA2, PBIL, cGA, pEA1 and pEA2 all consume similar ACT (ie less than 1 s) while GA1 and GA2 are the worst two. In hard instances, pEA2 and cGA are the two fastest algorithms. Besides, the former costs significantly less time than the latter in instances Fix-3,4 and Rnd-5,8,9,10. pEA1 is the third fastest algorithm. The difference between pEA1 and pEA2 indicates the effectiveness of the LS operator in reducing the computational time.

Regarding the overall performance in Table 10, we see that pEA2 is the best among the eight algorithms. Besides, pEA1 has similar performance with GA1 in fix networks and PBIL in random networks, respectively. Meanwhile, the LS operator has a positive impact on improving the overall performance of the proposed algorithm. To further support the finding, we show the t-test results comparing pEA2 and pEA1 with the others in Table 11.

5. Conclusions

This paper investigates the network coding resource minimization problem and develops a path-oriented encoding evolutionary algorithm (pEA) based on a new encoding approach. Different from the existing EAs which are based on the BLS or BTS encodings, the new EA is based on path-oriented encoding. Each chromosome consists of a number of BUs, each of which contains a set of link-disjoint paths from the source to the same receiver. In accordance to the new encoding approach, we develop the associated initialization, crossover and two mutation operators in the proposed EA. It is observed that between the two proposed mutation operators, the greedy mutation is more likely to result into a better performance than the ordinary mutation. Besides, a problem-specific local search operator is also developed to improve the solution quality. The simulation results show that the proposed pEA outperforms six existing state-of-the-art algorithms regarding the best solutions obtained and the computational time consumed, due to the new path-oriented encoding and the associated operators designed accordingly.

References

Ahlswede R, Cai N, Li SYR and Yeung RW (2000). Network information flow. IEEE Transactions on Information Theory 46 (4): 1204–1216.

Ahn CW (2011). Fast and adaptive evolutionary algorithm for minimum-cost multicast with network coding. Electronics Letters 47 (12): 700–701.

Ahn CW and Ramakrishna RS (2002). A genetic algorithm for shortest path routing problem and the sizing of populations. IEEE Transactions on Evolutionary Computation 6 (6): 566–579.

Cheng H and Yang S (2008). A genetic-inspired joint multicast routing and channel assignment algorithm in wireless mesh networks. In: Proceedings of the 2008 UK Workshop on Computational Intelligence, Leicester, UK: 2008 UK Workshop on Computational Intelligence. pp 159–164.

Cheng H and Yang S (2010a). Multi-population genetic algorithms with immigrants scheme for dynamic shortest path routing problems in mobile ad hoc networks. In: C Di Chio et al. (eds), EvoApplications 2010, Part I, LNCS 6024, Istanbul, Turkey. Springer-Verlag: Berlin, Heidelberg, pp 562–571.

Cheng H and Yang S (2010b). Genetic algorithms with immigrants schemes for dynamic multicast problems in mobile ad hoc networks. Engineering Applications of Artificial Intelligence 23 (5): 806–819.

Goldberg AV (1985). A new max-flow algorithm. MIT Technical Report MIT/LCS/TM-291, Laboratory for Computer Science, MIT.

Ji Y and Xing H (2011). A memory-storable quantum-inspired evolutionary algorithm for network coding resource minimization. Evolutionary Algorithms: 363–380.

Kim M, Ahn CW, Médard M and Effros M (2006). On minimizing network coding resources: An evolutionary approach. In: Proceedings of Second Workshop on Network Coding, Theory, and Applications (NetCod2006), Boston, MA.

Kim M et al (2007a). Evolutionary approaches to minimizing network coding resources. In: Proceedings of 26th IEEE International Conference on Computer Communications (INFOCOM2007), Anchorage, AK, pp 1991–1999.

Kim M, Aggarwal V, Reilly VO, Médard M and Kim W (2007b). Genetic representations for evolutionary minimization of network coding resources. In: Proceedings of EvoWorkshops 2007, LNCS, Vol. 4448, Valencia, Spain, pp 21–31.

Langberg M, Sprintson A and Bruck J (2006). The encoding complexity of network coding. IEEE Transactions on Information Theory 52 (6): 2386–2397.

Li SYR, Yeung RW and Cai N (2003). Linear network coding. IEEE Transactions on Information Theory 49 (2): 371–381.

Luong HN, Nguyen HTT and Ahn CW (2012). Entropy-based efficiency enhancement techniques for evolutionary algorithms. Information Sciences 188: 100–120.

Marczewski E and Steinhaus H (1958). On a certain distance of sets and the corresponding distance of functions. Colloquium Mathematicum 6 (1): 319–327.

Miller CK (1998). Multicast Networking and Applications. Pearson Education: Toledo, OH.

Mitchell M (1996). An Introduction to Genetic Algorithms. MIT Press: Cambridge, MA.

Oh S, Ahn CW and Ramakrishna RS (2006). A genetic-inspired multicast routing optimization algorithm with bandwidth and end-to-end delay constraints. In: I King et al. (eds), ICONIP 2006, Part III, LNCS 4234. Springer-Verlag: Berlin Heidelberg 2006, pp 807–816.

Palmer CC and Kershenbaum A (1994). Representing trees in genetic algorithms. In: Proceedings of the First IEEE Conference on Evolutionary Computation, Orlando, FL, pp 379–384.

Siregar JH, Zhang Y and Takagi H (2005). Optimal multicast routing using genetic algorithm for WDM optical networks. IEICE Transactions on Communications E88-B (1): 219–226.

Walpole RE, Myers RH, Myers SL and Ye K (2007). Probability and Statistics for Engineers and Scientists. Pearson Education: Boston, MA.

Xing H and Qu R (2011a). A population based incremental learning for network coding resources minimization. IEEE Communications Letters 15 (7): 698–700.

Xing H and Qu R (2011b). A population based incremental learning for delay constrained network coding resource minimization. In: Proceedings of Evo Applications 2011, Turin, Italy, pp 51–60.

Xing H and Qu R (2012). A compact genetic algorithm for the network coding based resource minimization problem. Applied Intelligence 36 (4): 809–823.

Xing H and Qu R (2013). A nondominated sorting genetic algorithm for bi-objective network coding based multicast routing problems. Information Sciences 233: 36–53.

Xing H, Ji Y, Bai L and Sun Y (2010). An improved quantum-inspired evolutionary algorithm for coding resource optimization based network coding multicast scheme. AEU-International Journal of Electronics and Communications 64 (12): 1105–1113.

Yang S and Yao X (2005). Experimental study on population-based incremental learning algorithms for dynamic optimization problems. Soft Computing 9 (11): 815–834.

Yang S, Cheng H and Wang F (2010). Genetic algorithms with immigrants and memory schemes for dynamic shortest path routing problems in mobile Ad Hoc networks. IEEE Transactions on Systems, Man, and Cybernetics-Part C: Applications and Reviews 40 (1): 52–63.

Acknowledgements

This work was supported in part by the China Scholarship Council, The University of Nottingham, National Natural Science Foundation of China (Grant No. 71001055) and Zhejiang Provincial Natural Science Foundation (Grant No. Y1100132).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Xing, H., Qu, R., Kendall, G. et al. A path-oriented encoding evolutionary algorithm for network coding resource minimization. J Oper Res Soc 65, 1261–1277 (2014). https://doi.org/10.1057/jors.2013.79

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1057/jors.2013.79